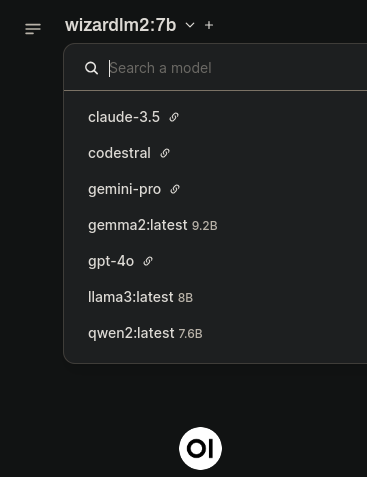

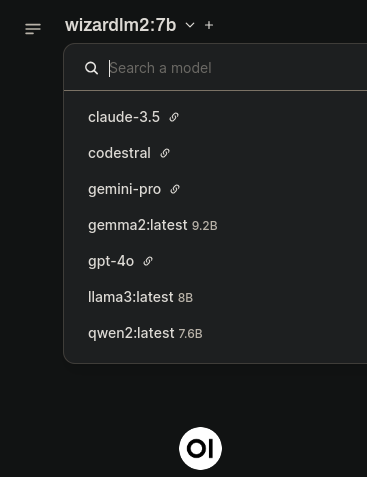

pleblee on Nostr:

local llms with ollama + open-webui + litellm configured with some apis

The free Claude 3.5 Sonnet is my daily driver now. When I run out of free messages, I switch over to open-webui and have access to various flagship models for a few cents. The local models usually suffice when I'm asking a dumb question.

Here's my `shell.nix`:

```
# FHS-style environment that starts both services in a tmux session
{ pkgs ? import <nixpkgs> {} }:
(pkgs.buildFHSEnv {
  name = "simple-fhs-env";
  targetPkgs = pkgs: with pkgs; [
    tmux
    bash
    python311
  ];
  runScript = ''
    #!/usr/bin/env bash
    set -x
    set -e
    # open-webui and litellm are expected to be installed in this venv
    source .venv/bin/activate
    tmux new-session -d -s textgen
    # top pane: the web UI
    tmux send-keys -t textgen "open-webui serve" C-m
    tmux split-window -v -t textgen
    # bottom pane: the LiteLLM proxy on port 8031
    tmux send-keys -t textgen "LITELLM_MASTER_KEY=hunter2 litellm --config litellm.yaml --port 8031" C-m
    tmux attach -t textgen
  '';
}).env
```
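Once the tmux session is up, it can be handy to confirm that both panes actually started a server. The following is a minimal sketch, not part of the original setup: it only tries a TCP connection to each port. Port 8031 comes from the `litellm` command above; port 8080 for `open-webui serve` is an assumption (its usual default), and the names `SERVICES`, `is_listening` and `report` are made up for this example.

```python
import socket

# Ports to probe. 8031 is taken from the shell.nix above;
# 8080 is an ASSUMPTION (the usual default of `open-webui serve`).
SERVICES = {
    "open-webui": 8080,
    "litellm": 8031,
}


def is_listening(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable
        return False


def report(host="127.0.0.1"):
    """Map each service name to whether its port accepts connections."""
    return {name: is_listening(host, port) for name, port in SERVICES.items()}
```

Calling `report()` from a Python shell inside the same environment returns a dict of booleans, one per service. A `False` entry usually means the corresponding tmux pane is worth a look.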

And here's `litellm.yaml`:

```
model_list:
  - model_name: codestral
    litellm_params:
      model: mistral/codestral-latest
      api_key: hunter2
  - model_name: claude-3.5
    litellm_params:
      model: anthropic/claude-3-5-sonnet-20240620
      api_key: sk-hunter2
  - model_name: gemini-pro
    litellm_params:
      model: gemini/gemini-1.5-pro-latest
      api_key: hunter2
      safety_settings:
        - category: HARM_CATEGORY_HARASSMENT
          threshold: BLOCK_NONE
        - category: HARM_CATEGORY_HATE_SPEECH
          threshold: BLOCK_NONE
        - category: HARM_CATEGORY_SEXUALLY_EXPLICIT
          threshold: BLOCK_NONE
        - category: HARM_CATEGORY_DANGEROUS_CONTENT
          threshold: BLOCK_NONE
```
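With this config loaded, the LiteLLM proxy exposes an OpenAI-compatible chat endpoint, and each `model_name` above becomes the value of the `model` field in a request. Here is a rough sketch of talking to it directly from Python with only the standard library. It assumes the proxy is running locally on port 8031 with the master key from the `shell.nix` (`hunter2` is the placeholder used in the post); `build_chat_request` and `ask` are hypothetical helper names, not part of LiteLLM.

```python
import json
import urllib.request

PROXY_URL = "http://localhost:8031"  # port from the litellm command in shell.nix
MASTER_KEY = "hunter2"               # placeholder, same as LITELLM_MASTER_KEY


def build_chat_request(model, prompt, base_url=PROXY_URL, api_key=MASTER_KEY):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,  # one of the model_name entries, e.g. "codestral"
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask(model, prompt):
    """Send the request to the proxy and return the reply text."""
    with urllib.request.urlopen(build_chat_request(model, prompt), timeout=60) as resp:
        body = json.load(resp)
    # OpenAI-style response: the first choice holds the assistant message
    return body["choices"][0]["message"]["content"]
```

For example, `ask("claude-3.5", "Explain buildFHSEnv in one sentence.")` would route through the proxy to the Anthropic entry. Swapping the first argument for `"codestral"` or `"gemini-pro"` picks a different backend without changing anything else.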

Published at 2024-07-18 18:00:16