Using tools like Continue in your vault

As the capabilities of artificial intelligence continue to expand, integrating AI into our workflows has become an increasingly attractive option. One of the most exciting applications of AI is its potential to enhance the way we manage and organize information. Obsidian, a powerful knowledge management tool, provides a robust platform for building a personal knowledge base. By layering AI tools like Continue over your Obsidian vault, you can unlock new levels of efficiency, creativity, and insight. This article will start to explore how to effectively use AI tools within Obsidian to enhance your note-taking, information management, and productivity.

An Explosion of AI Tools

Over the past few years, I have tinkered with ChatGPT and other chat interfaces, but I only recently stumbled upon the rapidly growing space of AI tools for coding. In such a short time, some amazing tools have emerged, most notably:

- Aider: A command line interface tool to iteratively generate, edit, and refactor code.

- Cursor: A fork of Visual Studio Code (VS Code) with integrated AI functionality.

- Cline: A plug-in for the command line and code editor, built to work with Anthropic’s Claude 3.5.

- Continue: A customizable VS Code plug-in with chat and code editing abilities.

These tools are designed to assist developers in writing, debugging, and even generating code from natural language prompts. They are powered by large language models (LLMs) like GPT-4 and Llama 3, which are trained on vast amounts of text data and can produce human-like responses to a wide range of prompts.

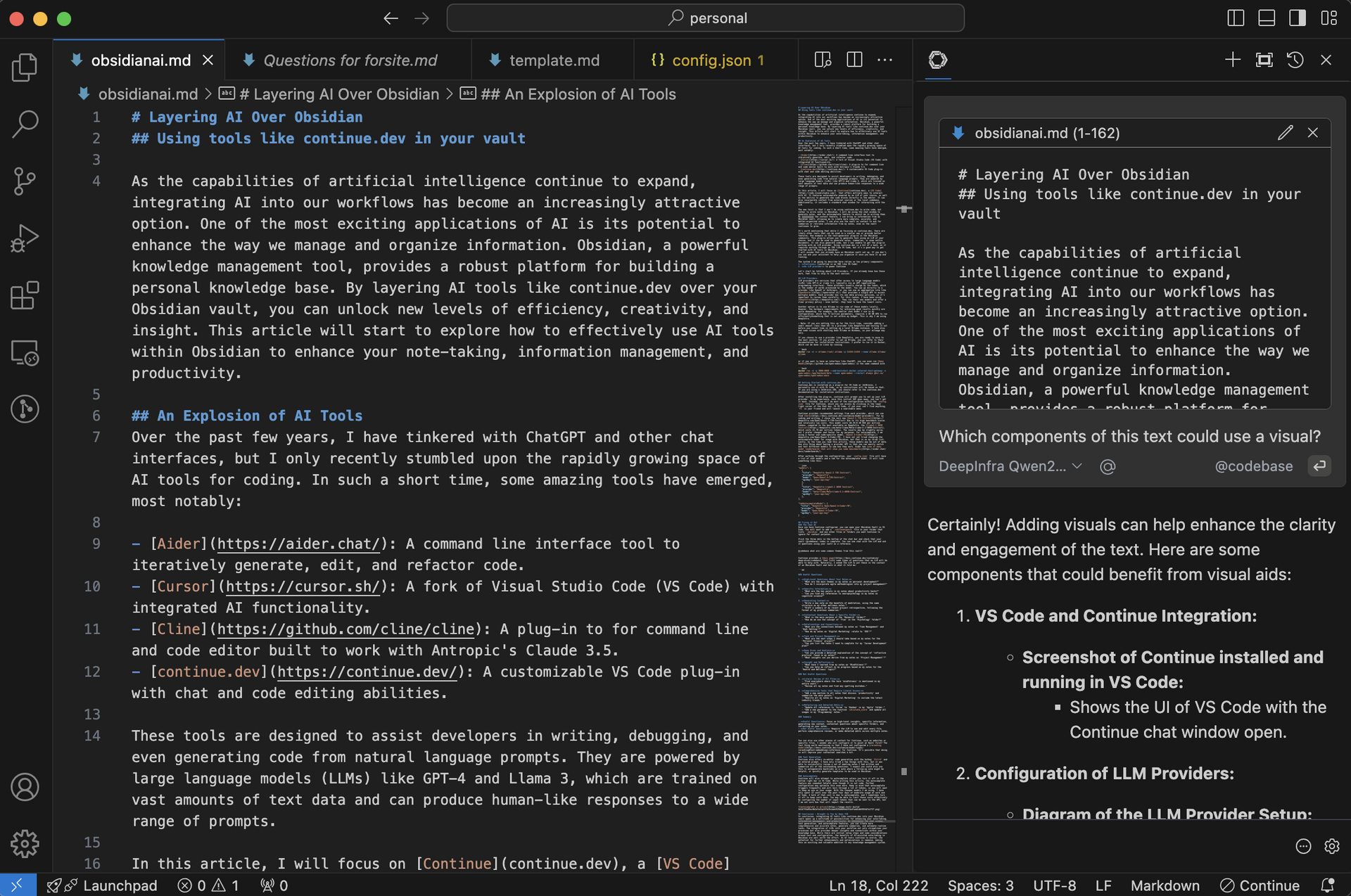

In this article, I will focus on Continue, a VS Code plug-in that offers multiple ways to interact with AI. It features autocomplete powered by an LLM of your choice, as well as the ability to generate new code blocks directly in the editor. It can also incorporate context from external sources or the local codebase. Additionally, it includes a standard chat window for interacting with the LLM.

The new twist is that I won’t be using Continue to write code, but rather to write notes in Obsidian. I will be using the chat window to generate notes, and the autocomplete feature to assist me in writing them. By leveraging the context feature, I can bring in information from my Obsidian vault, allowing me to create more complete, accurate, and better-organized notes. I can also use the vault as context to ask for summaries or retrieve information from my notes, even as the vault continues to grow.

It’s worth mentioning that while I am focusing on Continue, there are likely other tools that can be used in a similar way or provide better features. One example is the text-generator plug-in for Obsidian. This plug-in allows you to generate text using an LLM of your choice, and it can be used to generate notes, summaries, or even entire documents. It can also generate code, but I was unable to get the plug-in working with my LLM provider. Using Continue is a bit of a hack, as it requires working through an IDE like VS Code, but it’s a good way to get started with AI tools in Obsidian. I will assume that you already have an Obsidian vault set up. If you don’t, you can ask your assistant to help you organize it once you have it up and running.

The system I am going to describe here relies on two primary components:

- Continue (installed in an IDE like VS Code)

- An LLM provider to power Continue

Let’s start by talking about LLM Providers. If you already know how these work, feel free to skip to the next section.

LLM Providers

LLM providers are services that offer access to large language models (LLMs) like GPT-4 or Llama 3.1, typically via an API (application programming interface). These providers usually charge by the token. A token is a small chunk of text, often a word or a piece of a word, and the tokens in both your prompt and the model’s response count toward the bill. You can go directly to a provider like OpenAI or Anthropic, or you can use an aggregation site like OpenRouter that provides a single API to access multiple models. Each provider has its own data privacy policies, so it’s important to review them carefully. For this reason, I have been using DeepInfra. They run their own models and offer a clear privacy policy - even better, they tend to have the lowest costs.

h/t Yarik Sychov 🫂 (npub17vh…nhkq) note1qfc…scxa
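To make "access via an API" a little more concrete, here is a minimal sketch of what a chat request to a provider can look like, using only the Python standard library. It assumes the provider exposes an OpenAI-compatible chat completions endpoint; the DeepInfra URL below is an assumption that you should confirm in the provider's documentation, and `build_chat_request` and `send_chat_request` are illustrative helper names, not part of any library.

```python
import json
import urllib.request

# Assumed OpenAI-compatible endpoint; confirm the exact URL in your provider's docs.
API_URL = "https://api.deepinfra.com/v1/openai/chat/completions"


def build_chat_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_chat_request(request: urllib.request.Request) -> str:
    """Send the request and return the text of the first reply."""
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


# Usage (requires a real API key and network access):
#   req = build_chat_request("your-api-key", "Qwen/Qwen2.5-72B-Instruct", "Say hello.")
#   print(send_chat_request(req))
```

Tools like Continue make this kind of call for you; the point is only that every chat message or autocomplete suggestion becomes a request like this one, and each request is billed by its tokens.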

Another option is to use Ollama to run some of these models locally. However, the hardware requirements for achieving good results quickly are quite demanding. For example, the smaller chat model I use in my configuration, which has 72 billion parameters, requires a 48 GB GPU to run efficiently—something that is not within my budget. This is why I am using DeepInfra.

Note: If you are setting this up for the first time, consider adding a small amount (less than $5) to a provider like DeepInfra and testing it out before you invest time in setting up a local Ollama instance. I have also had some issues with shutting down Ollama on Windows, so your mileage may vary.

If you choose to use a provider like DeepInfra, you are ready to move to the next section. If you prefer to set up Ollama, you can refer to their documentation for installation instructions. I prefer to run it in Docker, which can be done on Linux by running

docker run -d -v ollama:/root/.ollama -p 11434:11434 --name ollama ollama/ollama

or, if you want an interface like ChatGPT, you can also run Open WebUI in a second container that connects to your Ollama instance with

docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main
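Once the Ollama container is running, a quick way to confirm it is reachable is to ask it which models it has downloaded. The sketch below assumes Ollama's `/api/tags` endpoint on the port mapped above (11434) and a response shaped like `{"models": [{"name": ...}]}`; `model_names` and `list_local_models` are hypothetical helper names.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # matches the -p 11434:11434 mapping above


def model_names(tags_response: dict) -> list[str]:
    """Extract model names from an /api/tags response body."""
    return [model["name"] for model in tags_response.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL) -> list[str]:
    """Query a running Ollama instance for its downloaded models."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as response:
        return model_names(json.load(response))


# Usage (requires the container to be running):
#   print(list_local_models())
```

An empty list means the server is up but no model has been pulled yet; a connection error means the container is not reachable on that port.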

Getting Started with Continue

Continue is installed as a plug-in for VS Code or JetBrains. I personally use it with VS Code, so my instructions will be based on that. If you are using a JetBrains IDE, you should refer to the Continue documentation for installation instructions.

After you install the plug-in, Continue will prompt you to set up your LLM provider. In my experience, once this initial GUI goes away, you can’t get it back. Instead, you will do most of the configuration within the config.json file for Continue, which you can access by clicking in the lower right corner of the Chat box. In VS Code, if you ever can’t find something, F1 is your friend: it opens the Command Palette, a searchable menu of commands.

Continue provides recommended settings for each provider in its documentation. For my coding and writing, I chose the very new Qwen2.5 72B Instruct due to its high benchmark scores and relatively low costs. This model costs $0.35-0.40 USD per million tokens, compared to the best available on DeepInfra, the [Llama3.1 405B Instruct](https://deepinfra.com/meta-llama/Meta-Llama-3.1-405B-Instruct), which costs $1.78 per million tokens. The results may be slightly worse, but I prefer cheaper and faster for my purposes. For autocomplete, I am using a faster and code-specific model, Qwen2.5-Coder-7B. I have not yet tried changing the autocomplete model for usage with Obsidian, but that is on my to-do list. Lastly, by the time you read this article, these models may be out of date. One nice thing about having a provider API is that you can easily switch and test different models to see how they work. Aider maintains one of the many model leaderboards where you can compare benchmarks.
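To put the price difference in perspective, the arithmetic can be sketched in a few lines. The prices are the ones quoted above (using the upper $0.40 figure for Qwen2.5 72B); the 500,000-token workload is a made-up number for illustration, and real bills depend on how each provider counts input and output tokens.

```python
def cost_usd(tokens: int, usd_per_million_tokens: float) -> float:
    """Cost of processing `tokens` tokens at a flat per-million-token price."""
    return tokens / 1_000_000 * usd_per_million_tokens


# Prices quoted in the article, in USD per million tokens.
QWEN_72B_PRICE = 0.40    # upper end of the quoted 0.35-0.40 range
LLAMA_405B_PRICE = 1.78

tokens = 500_000  # hypothetical workload, for illustration only

qwen_cost = cost_usd(tokens, QWEN_72B_PRICE)
llama_cost = cost_usd(tokens, LLAMA_405B_PRICE)

print(f"Qwen2.5 72B:   ${qwen_cost:.2f}")
print(f"Llama3.1 405B: ${llama_cost:.2f}")
print(f"Price ratio:   {LLAMA_405B_PRICE / QWEN_72B_PRICE:.2f}x")
```

At these prices the larger model costs roughly four to five times as much per token, which is why the cheaper model is an easy default for everyday note-taking.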

After working through the configuration, your config.json file will have a list of chat models and an entry for the tab autocomplete model. It will look something like this:

    "models": [
      {
        "title": "DeepInfra Qwen2.5 72B Instruct",
        "provider": "deepinfra",
        "model": "Qwen/Qwen2.5-72B-Instruct",
        "apiKey": "your-api-key"
      },
      {
        "title": "DeepInfra Llama3.1 405B Instruct",
        "provider": "deepinfra",
        "model": "meta-llama/Meta-Llama-3.1-405B-Instruct",
        "apiKey": "your-api-key"
      }
    ],
    "tabAutocompleteModel": {
      "title": "DeepInfra Qwen/Qwen2.5-Coder-7B",
      "provider": "deepinfra",
      "model": "Qwen/Qwen2.5-Coder-7B",
      "apiKey": "your-api-key"
    }
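Hand-editing this file makes it easy to leave a stray comma or a placeholder key behind, so a small sanity check can save some head-scratching. The sketch below is one possible approach, not part of Continue itself: it assumes the complete file is plain JSON (a single top-level object containing the keys shown above, with no comments), and `check_continue_config` is a hypothetical helper name.

```python
import json


def check_continue_config(text: str) -> list[str]:
    """Return a list of human-readable problems found in a Continue config."""
    config = json.loads(text)  # raises json.JSONDecodeError on malformed JSON

    problems = []
    entries = list(config.get("models", []))
    if not entries:
        problems.append("no chat models configured")
    if "tabAutocompleteModel" in config:
        entries.append(config["tabAutocompleteModel"])

    for entry in entries:
        title = entry.get("title", "<untitled>")
        for key in ("provider", "model"):
            if not entry.get(key):
                problems.append(f"{title}: missing '{key}'")
        # Assumption: hosted providers need a real key; a local Ollama does not.
        needs_key = entry.get("provider") != "ollama"
        if needs_key and entry.get("apiKey", "") in ("", "your-api-key"):
            problems.append(f"{title}: apiKey is empty or still a placeholder")
    return problems


# Usage (the path is an assumption; adjust to wherever your config.json lives):
#   from pathlib import Path
#   text = (Path.home() / ".continue" / "config.json").read_text(encoding="utf-8")
#   for problem in check_continue_config(text):
#       print(problem)
```

An empty result means the basics are in place; it does not verify that the API key or model name is actually accepted by the provider.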

Trying it Out

The Chat UI

Once you have Continue configured, you can open your Obsidian Vault in VS Code. You will want to add a .continueignore file in your folder that lists .obsidian and any other files or folders you want Continue to ignore for context purposes.
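As a concrete starting point, the sketch below writes a `.continueignore` file into a vault folder. It assumes the file takes `.gitignore`-style patterns, one per line; `.obsidian/` comes from the text above, while `.trash/` and `attachments/` are hypothetical examples that you should replace with your own folders. `write_continueignore` is an illustrative helper name.

```python
from pathlib import Path
from typing import Sequence

# `.obsidian/` holds Obsidian's own settings; the other entries are
# hypothetical examples of folders that add noise rather than context.
DEFAULT_PATTERNS = (".obsidian/", ".trash/", "attachments/")


def write_continueignore(vault: Path, patterns: Sequence[str] = DEFAULT_PATTERNS) -> Path:
    """Write one ignore pattern per line to <vault>/.continueignore."""
    target = vault / ".continueignore"
    target.write_text("\n".join(patterns) + "\n", encoding="utf-8")
    return target


# Usage:
#   write_continueignore(Path("/path/to/your/vault"))
```

Creating the file by hand in any text editor works just as well; the script is only useful if you maintain several vaults or want to keep the list under version control.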

Click the three dots at the bottom of the chat bar and check that the index of your vault (@codebase) is complete. You can now chat with the LLM and ask it questions using your vault as a reference.

@codebase what are some common themes from this vault?

Continue provides a docs page that lists some types of questions that an LLM will be able to help with. Naturally, I asked the LLM to put these in the context of an Obsidian Vault and here is what it told me:

### Useful Questions

1. **High-Level Questions About Your Notes:**

- "What are the main themes in my notes on personal development?"

- "How do I incorporate agile methodologies into my project management?"

2. **Specific Information:**

- "What are the key points in my notes about productivity hacks?"

- "Can you find any references to neuropsychology in my notes on cognitive science?"

3. **Generating Content:**

- "Write a new note on the benefits of meditation, using the same structure as my other wellness notes."

- "Draft a summary of my recent project retrospective, following the format of my previous summaries."

4. **Contextual Questions About a Specific Folder:**

- "What is the main purpose of the 'Research' folder?"

- "How do we use the concept of 'flow' in the 'Psychology' folder?"

5. **Relationships and Connections:**

- "What are the connections between my notes on 'Time Management' and 'Goal Setting'?"

- "How do my notes on 'Digital Marketing' relate to 'SEO'?"

6. **Task and Project Management:**

- "What are the next steps I should take based on my notes for the 'Personal Finance' project?"

- "Can you list the tasks I need to complete for my 'Career Development' plan?"

7. **Deep Dives and Analysis:**

- "Can you provide a detailed explanation of the concept of 'reflective practice' found in my notes?"

- "What insights can you derive from my notes on 'Project Management'?"

8. **Insight and Reflection:**

- "What have I learned from my notes on 'Mindfulness'?"

- "Can you help me reflect on my progress based on my notes for the 'Health and Wellness' topic?"

### Not Useful Questions

1. **Literal Review of All Files:**

- "Find everywhere where the term 'mindfulness' is mentioned in my entire vault."

- "Review all my notes and find any spelling mistakes."

2. **Comprehensive Tasks that Require Literal Access:**

- "Add a new section to all notes that discuss 'productivity' and summarize the main points."

- "Rewrite all my notes on 'Digital Marketing' to include the latest industry trends."

3. **Refactoring and Detailed Edits:**

- "Update all references to 'Scrum' to 'Kanban' in my 'Agile' folder."

- "Add a new parameter to the function `calculate_score` and update all usages in my 'Programming' notes."

### Summary

- **Useful Questions**: Focus on high-level insights, specific information, generating new content, contextual questions about specific folders, and reflecting on your notes.

- **Not Useful Questions**: Require the LLM to see and edit every file, perform comprehensive reviews, or make detailed edits across multiple notes.

Text Generation

Continue also offers in-editor code generation with the hotkey Ctrl+I and an entered prompt. I have only tried a few things with this, but it was able to successfully review a set of meeting notes I had written and summarize all of the outstanding questions. I expect you could also use this to autogenerate backlinks on a note where a follow-up note might be helpful, or quickly generate templates to be used in Obsidian. I ended up editing this entire article by selecting sections and prompting the AI with the following: “You are a proficient writer. Please edit this text for style, typos, spelling, and grammar.”

Autocomplete

Continue will also attempt to autocomplete unless you turn it off in the bottom right bar in VS Code. While writing this article, the autocomplete feature was somewhat helpful even though the text is not code. Further configuration may optimize this even more. Keep in mind that autocomplete triggers frequently and will burn through a lot of tokens, so you will want to keep an eye on your usage. With the cheaper models I am using, I have only spent 10 cents over the past four days of moderate usage at work and at home. The bulk of that cost is due to autocomplete, and I sometimes turn it off to keep costs down. You may be able to limit these costs effectively by configuring the number of input tokens that can be sent to the API, but I am not sure how that will impact the results.

Conclusion - Brought to You by Qwen 72B

In conclusion, integrating AI tools like Continue into your Obsidian vault opens up a multitude of possibilities for enhancing your note-taking, information management, and productivity. By leveraging the chat window, text generation, and autocomplete features, you can create more comprehensive and accurate notes, generate summaries, and automate routine tasks. The integration of LLMs into your workflow not only streamlines your processes but also provides deeper insights and connections within your knowledge base. While there are initial setup steps and some considerations around cost and configuration, the benefits of AI-assisted note-taking in Obsidian are well worth the effort. As AI tools continue to evolve, the potential for further enhancements and optimizations is immense, making this an exciting and valuable addition to any knowledge management system.