jb55 on Nostr: As performance optimization enjoyer i can’t help but look at the transformer ...

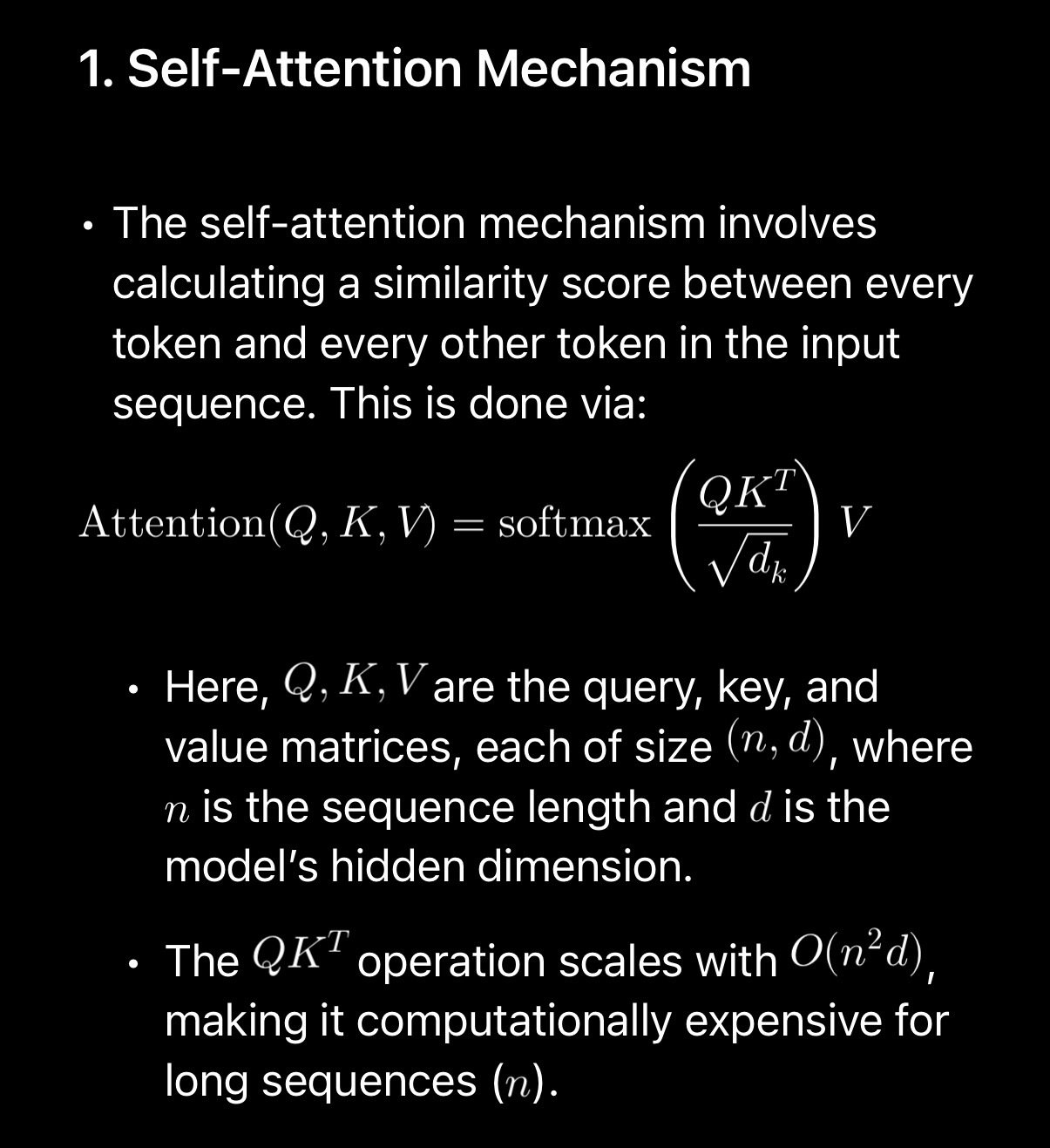

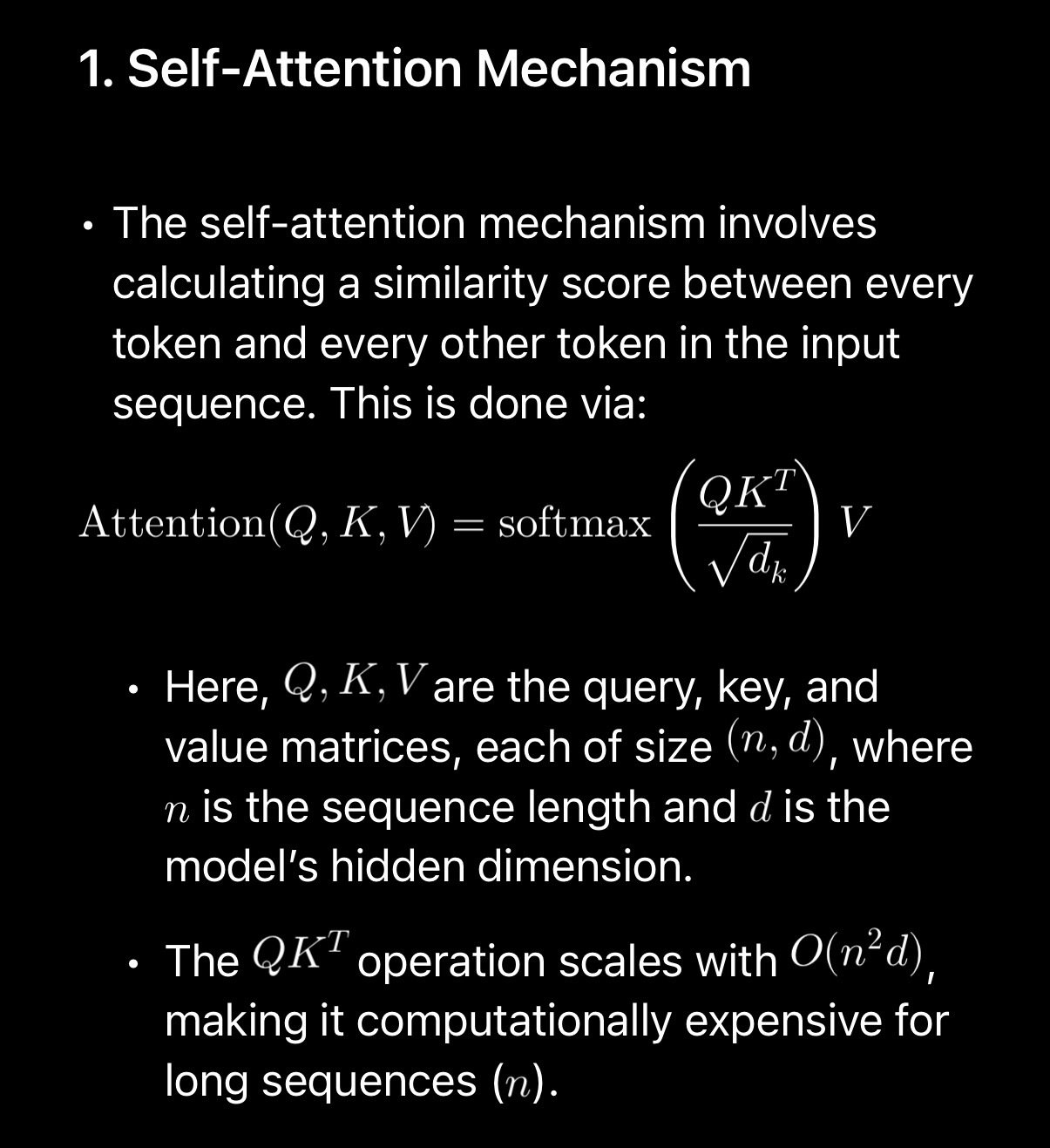

As a performance optimization enjoyer, I can’t help but look at the transformer architecture in LLMs and notice how incredibly inefficient they are, specifically the attention mechanism.

Looks like I am not the only one who has noticed this, and it seems like people are working on it.

https://arxiv.org/pdf/2406.15786

Lots of AI researchers are not performance engineers, and it shows. I suspect we can reach similar results with much less computational complexity. This will be good news if you want to run these things on your phone.
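To put a rough number on the attention complaint: in standard scaled dot-product attention, every token is compared against every other token, so the work grows with the square of the sequence length. The sketch below is only a back-of-the-envelope count for a single head. It is not taken from the linked paper, and the function name `attention_cost` and the head dimension of 64 are assumptions chosen for illustration.

```python
def attention_cost(seq_len: int, head_dim: int) -> dict:
    """Rough multiply-add count for one head of standard scaled
    dot-product attention (hypothetical helper, illustration only)."""
    # Q @ K^T: one dot product of length head_dim per (query, key) pair.
    score_macs = seq_len * seq_len * head_dim
    # softmax(scores) @ V: each output row mixes seq_len value vectors.
    value_macs = seq_len * seq_len * head_dim
    return {
        "score_entries": seq_len * seq_len,  # size of the score matrix
        "macs": score_macs + value_macs,
    }


if __name__ == "__main__":
    # Doubling the sequence length quadruples both numbers.
    for n in (1024, 2048, 4096):
        cost = attention_cost(n, head_dim=64)
        print(n, cost["score_entries"], cost["macs"])
```

This counts only the two matrix products and ignores the softmax itself, the projections, and multiple heads and layers, so treat it as a lower bound on the trend rather than a measurement.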

Published at 2024-11-17 12:35:22

Event JSON

{
  "id": "4e92e4c01706c138719a54464aefa28f363d651c9a4b284864af61894d3c6d4b",
  "pubkey": "32e1827635450ebb3c5a7d12c1f8e7b2b514439ac10a67eef3d9fd9c5c68e245",
  "created_at": 1731846922,
  "kind": 1,
  "tags": [
    [
      "imeta",
      "url https://i.nostr.build/LFm0CEhOtaSXV5rq.jpg",
      "blurhash e25q|s%f00M{9Ft7t7oft7j[%MxuM{Rjayxuj[M{j[of9Fofxuoft7",
      "dim 1205x1317"
    ],
    [
      "r",
      "https://arxiv.org/pdf/2406.15786"
    ],
    [
      "r",
      "https://i.nostr.build/LFm0CEhOtaSXV5rq.jpg"
    ]
  ],
  "content": "As performance optimization enjoyer i can’t help but look at the transformer architecture in LLMs and notice how incredibly inefficient they are, specifically the attention mechanism. \n\nLooks like i am not the only one who has noticed this and it seems like people are working on it.\n\nhttps://arxiv.org/pdf/2406.15786\n\nLots of ai researchers are not performance engineers and it shows. I suspect we can reach similar results with much less computational complexity. This will be good news if you want to run these things on your phone. https://i.nostr.build/LFm0CEhOtaSXV5rq.jpg ",
  "sig": "061e8ec78331412f9356635b2a9d5d1472992f19103b9b30ebe361be47bd92fe2bce7dfc23d43e9dcfa494094e72022ef94d398da035f0bb0eff6eb11bc617f8"
}