jb55 on Nostr

I've set up ollama.jb55.com as my AI model server: my own private GPU-powered coding agent over WireGuard that I can use anywhere. So cool.

I've also set up n8n.jb55.com as an AI workflow/CI server. I'm going to build MCP/goose tools so my agents can trigger specific workflows for me.

Example 1: "hey goose, can you start a code review for PRs #42 and #920?"

Action:

1. n8n workflow that fetches the PR

2. passes the diffs to the local LLM for review

3. sends a nostr note to my local relay so that I am notified.
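The agent-side half of this could be sketched roughly as below: pull the PR numbers out of the request and POST them to an n8n webhook that starts the workflow. This is only a sketch under assumptions. The webhook host, the `code-review` path, and the `{"prs": [...]}` payload shape are all hypothetical placeholders; the only n8n convention relied on is that production webhooks live under `/webhook/<path>`.

```python
import json
import re
import urllib.request

# Hypothetical URL: host and "code-review" path are placeholders, not from the post.
N8N_WEBHOOK_URL = "https://n8n.example.com/webhook/code-review"


def extract_pr_numbers(utterance: str) -> list[int]:
    """Pull PR numbers written like '#42' out of a typed or spoken request."""
    return [int(n) for n in re.findall(r"#(\d+)", utterance)]


def build_review_request(
    pr_numbers: list[int], url: str = N8N_WEBHOOK_URL
) -> urllib.request.Request:
    """Build (but do not send) the POST that would start the review workflow."""
    # Assumed payload shape; the real workflow decides what it wants to receive.
    body = json.dumps({"prs": pr_numbers}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def trigger_review(utterance: str) -> int:
    """Send the request and return the HTTP status code n8n answers with."""
    req = build_review_request(extract_pr_numbers(utterance))
    with urllib.request.urlopen(req, timeout=30) as resp:
        return resp.status
```

Fetching the PRs, running the review, and publishing the note all stay inside the n8n workflow; the tool only has to hand over the PR numbers.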

Example 2: "hey goose, can you tell me if I have any important looking emails from my team"

Action: call `notmuch search query:work` and read my damus work emails, summarizing the most important ones for me
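A minimal sketch of what that tool call might look like, assuming `notmuch` is on the PATH and a saved query named `work` exists. `--format=json` and `--limit` are standard `notmuch search` options; the helper names and the one-line-per-thread prompt format are made up for illustration. It only passes thread summaries (date, authors, subject, tags) along, not message bodies.

```python
import json
import subprocess


def search_threads(query: str = "query:work", limit: int = 20) -> list[dict]:
    """Run `notmuch search` and return the matching threads as dicts."""
    out = subprocess.run(
        ["notmuch", "search", "--format=json", f"--limit={limit}", query],
        check=True,
        capture_output=True,
        text=True,
    ).stdout
    return json.loads(out)


def format_for_llm(threads: list[dict]) -> str:
    """Flatten thread summaries into one line each for a summarization prompt."""
    lines = []
    for t in threads:
        tags = ",".join(t.get("tags", []))
        lines.append(
            f"{t.get('date_relative', '?')} | {t.get('authors', '?')} | "
            f"{t.get('subject', '')} [{tags}]"
        )
    return "\n".join(lines)
```

The output of `format_for_llm(search_threads())` can then be dropped into a prompt asking the local model which threads look important.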

After this I am going to try to implement hotword detection so I can just say "hey goose" and then have it listen to commands so I don't have to type them. All with local LLMs!

super fun!

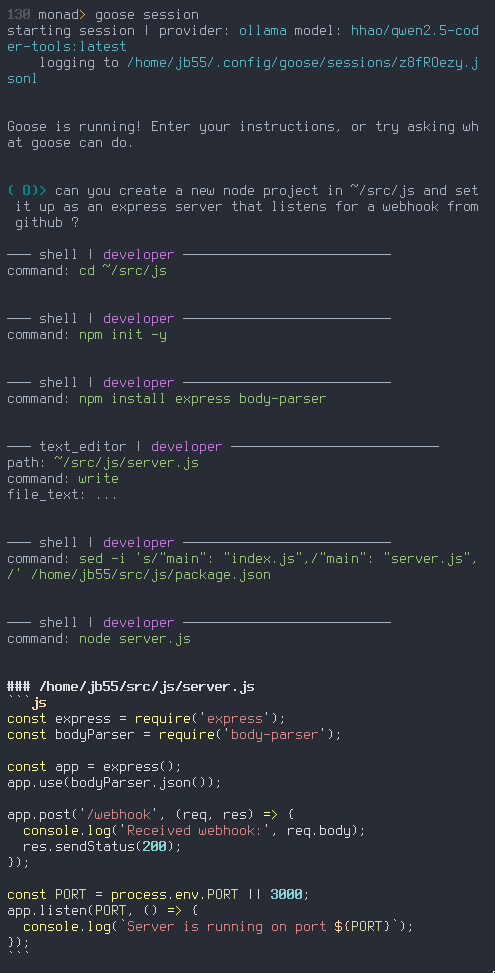

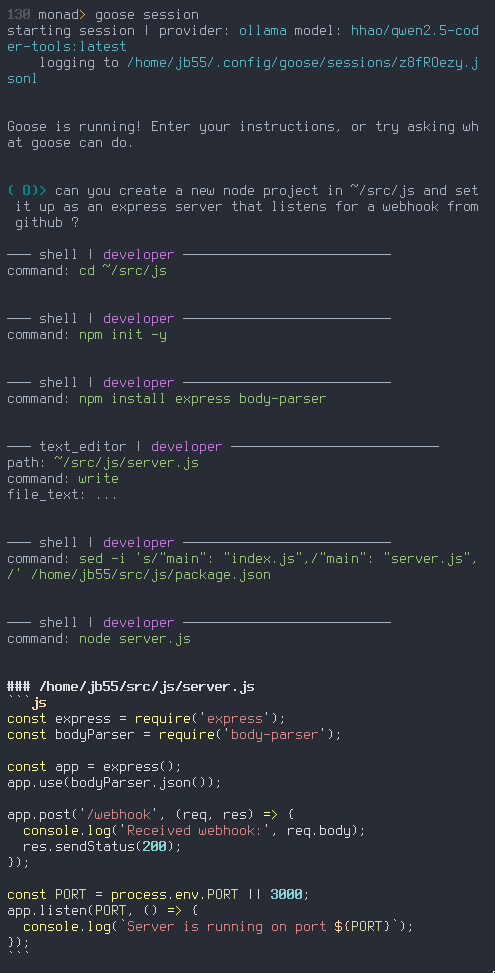

I'm running this Qwen 7B-parameter model for a local coding-AI goose agent. It seems to be working OK so far, at least for boilerplate stuff.

https://ollama.com/hhao/qwen2.5-coder-tools
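For anyone who wants to poke at a model like this without goose in the middle, here is a rough sketch of a direct call to Ollama's `/api/chat` endpoint. The base URL is an assumption (Ollama's default local port) and the model name is taken from the link above without a size tag, so adjust both to whatever you actually pulled.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: default Ollama port, swap in your own host
MODEL = "hhao/qwen2.5-coder-tools"     # from the link above; add a size tag if you pulled one


def build_chat_request(
    prompt: str, base_url: str = OLLAMA_URL, model: str = MODEL
) -> urllib.request.Request:
    """Build (but do not send) a non-streaming chat request for Ollama."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one JSON object instead of a stream of chunks
    }
    return urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=300) as resp:
        return json.loads(resp.read())["message"]["content"]
```

Something like `ask("Review this diff:\n" + diff_text)` would be the simplest version of the review step, with `diff_text` supplied by whatever fetched the PR.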

Published at 2025-02-18 23:49:24

Event JSON

{
  "id": "43994265bd685e2e06e3baf4bca6d93a4bd79ad717865bf5a8aa11a4e51f5e92",
  "pubkey": "32e1827635450ebb3c5a7d12c1f8e7b2b514439ac10a67eef3d9fd9c5c68e245",
  "created_at": 1739922564,
  "kind": 1,
  "tags": [
    ["t", "920"],
    ["t", "42"],
    ["q", "80ce9dc0ab6b3b0e62867c4314b4b22f261b76ba7662af716d581daf65726099"],
    ["p", "32e1827635450ebb3c5a7d12c1f8e7b2b514439ac10a67eef3d9fd9c5c68e245"]
  ],
  "content": "I've setup ollama.jb55.com as my AI model server. my own private GPU-powered coding agent over wireguard that I can use anywhere. so cool.\n\nI've also setup n8n.jb55.com as an AI workflow/CI server, going to build MCP/goose tools where my agents can trigger specific workflows for me.\n\nExample 1: \"hey goose, can you start a code review for prs #42 and #920?\nAction: \n1. n8n workflow that fetches the PR\n2. passes the diffs to the local LLM for review\n3. sends nostr node to my local relay so that I am notified.\n\nExample 2: \"hey goose, can you tell me if I have any important looking emails from my team\"\nAction: call `notmuch search query:work` and read my damus work emails, summarizing the most important ones for me\n\nAfter this I am going to try to implement hotword detection so I can just say \"hey goose\" and then have it listen to commands so I don't have to type them. All with local LLMs!\n\nsuper fun!\nnostr:note1sr8fms9tdvasuc5x03p3fd9j9unpka46we327utdtqw67etjvzvsd9phxr",
  "sig": "89d65b2041e49e2d683ea800f6c2a05cdb31b17413f6a04e77d58c8bb3a04240f7dff281df67953c355dd608afe21e184b556c4e23e64d4057e2001752edd88c"
}
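For reference, the `id` field in an event like this is not arbitrary: per NIP-01 it is the SHA-256 of a compact JSON array built from the other fields. A small sketch of that computation, assuming Python's `json.dumps` escaping lines up with the NIP-01 rules (it should for ordinary text); the function name is just for illustration and signature checking is left out.

```python
import hashlib
import json


def compute_event_id(
    pubkey: str, created_at: int, kind: int, tags: list[list[str]], content: str
) -> str:
    """Return the hex event id: sha256 over [0, pubkey, created_at, kind, tags, content]."""
    serialized = json.dumps(
        [0, pubkey, created_at, kind, tags, content],
        separators=(",", ":"),  # no whitespace between tokens
        ensure_ascii=False,     # keep non-ASCII characters as raw UTF-8
    )
    return hashlib.sha256(serialized.encode("utf-8")).hexdigest()
```

Feeding it the `pubkey`, `created_at`, `kind`, `tags`, and `content` of a well-formed event is expected to give back that event's `id`; a mismatch usually means the content was altered somewhere along the way.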