Pharma and AI for biology will not advance as quickly as the consensus seems to expect, imo. That isn’t to say there won’t be progress; there will. But legacy discovery pipelines will take longer to replace than people think, for a variety of reasons.

AI is heavily data dependent. Everyone saw the impact of AlphaFold and overextrapolated the knock-on effects for the rest of biotech. However, AlphaFold is an exception that proved the rule. The Protein Data Bank was exceptionally well structured, narrowly defined, and large. The ~123k structures in the PDB sample a significant fraction of the most relevant and easy-to-crystallize protein structures, so out-of-distribution structures are difficult to characterize, and model predictions for them are correspondingly difficult to invalidate. AI is great at interpolating within a data distribution, and so predicting new easy-to-crystallize structures was bound to work eventually. No other database for biochemistry or cell biology is even close to as densely sampled, organized, or defined.

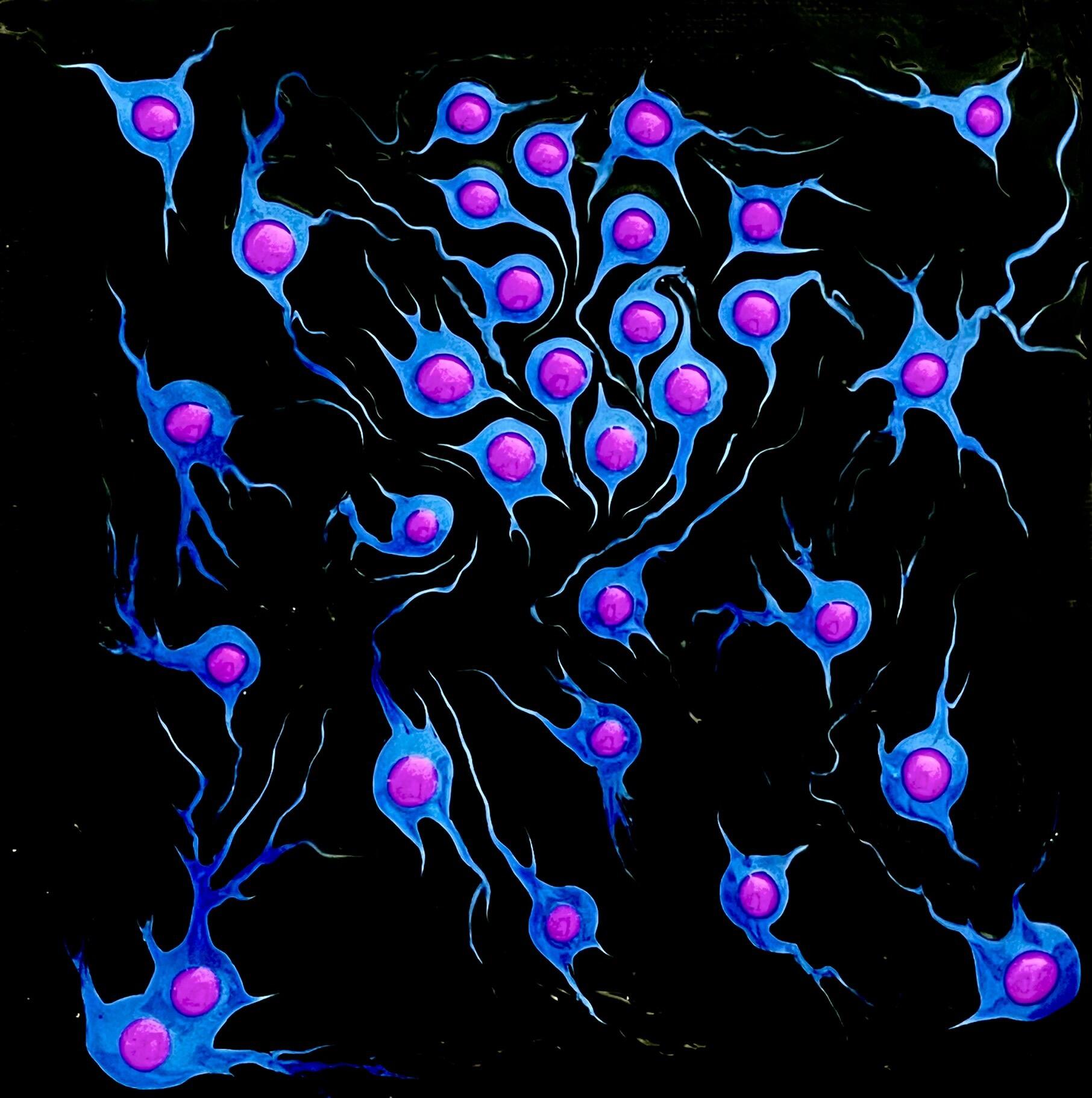

The main limitation is that large biochemical systems are incredibly difficult to simulate AND interrogate. The tools are bad, the readouts are bad, and the combinatorial explosion of interaction space is impossible to simulate on classical systems. Even though sequencing-based technologies have revealed a lot of new biology, they give a static, destructive endpoint. I fear that it may be near-impossible, yet scientifically important, to get a comprehensive spatial measurement over time in the same system.
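To put rough numbers on that combinatorial explosion, here is a minimal sketch that just counts possible k-way interactions and on/off states among n components. The function names are made up for illustration, and the 20,000 figure (roughly the number of human protein-coding genes) is my own illustrative assumption, not a number from the post.

```python
from math import comb


def interaction_counts(n_components: int, max_order: int = 3) -> dict[int, int]:
    """Count distinct k-way combinations among n components, for k = 2..max_order."""
    return {k: comb(n_components, k) for k in range(2, max_order + 1)}


def binary_state_count(n_components: int) -> int:
    """Count joint states if every component is simply 'on' or 'off'."""
    return 2 ** n_components


if __name__ == "__main__":
    # 20_000 is an illustrative stand-in for a proteome-sized system (assumption).
    for n in (10, 100, 1_000, 20_000):
        counts = interaction_counts(n)
        print(f"n={n}: pairs={counts[2]:,} triples={counts[3]:,}")
    # Even a tiny on/off model of 300 components has more states than can be enumerated.
    print(f"2**300 has {len(str(binary_state_count(300)))} digits")
```

At 20,000 components this already gives about 2×10^8 pairs and about 1.3×10^12 triples, before accounting for concentrations, localization, or time, which is the part that makes exhaustive simulation or measurement look hopeless.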

The last limitation I’ll mention is that every discovery in pharma makes subsequent discoveries more difficult (referred to as Eroom’s law). This is fundamentally at odds with normal tech and suggests hard limits on our ability to interrogate living systems. If this proves true, progress in biology will lag further behind than people expect.
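Eroom’s law is usually quantified as new drug approvals per inflation-adjusted billion dollars of R&D halving about every nine years since 1950. Below is a minimal sketch of how that compounds next to a Moore’s-law-style doubling. The nine-year halving time and two-year doubling time are rough illustrative figures that are not in the post itself, and the function names are hypothetical.

```python
def relative_rd_efficiency(years_elapsed: float, halving_time_years: float = 9.0) -> float:
    """Fraction of the starting R&D efficiency left after years_elapsed,
    assuming a constant halving time (rough Eroom's-law figure, an assumption)."""
    return 0.5 ** (years_elapsed / halving_time_years)


def moore_style_growth(years_elapsed: float, doubling_time_years: float = 2.0) -> float:
    """Multiplicative improvement after years_elapsed,
    assuming a constant doubling time (rough Moore's-law figure, an assumption)."""
    return 2.0 ** (years_elapsed / doubling_time_years)


if __name__ == "__main__":
    for years in (0, 9, 18, 36, 60):
        print(
            f"{years:>2} years: drug R&D efficiency x{relative_rd_efficiency(years):.4f}, "
            f"Moore-style tech x{moore_style_growth(years):.3g}"
        )
```

Under these assumptions, sixty years of halving every nine years is about a hundredfold drop in efficiency, while the same period of two-year doublings is a gain of roughly a billionfold. That gap is the sense in which pharma runs opposite to "normal tech".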

What I think will happen is better scientific automation. “AI scientists” will automate hypothesis generation and testing. Robotics will automate and scale up brute-force screening. Humans will build better in vitro models (e.g. organoids) and better measurement tools. Testing will get cheaper as tech gets better; trial and error can scale up a few more orders of magnitude. What do we get from this? There will probably be a few more “wonder drugs” where we don’t understand the complete mechanism but trials demonstrate efficacy. I suspect dietary science will be a significant beneficiary of cheaper testing (democratizing access to pharma-scale resources for less profitable applications).

cellsoftheseus / Evan

npub1uc…klg26

2024-12-27 19:58:01

in reply to nevent1q…a8ju
