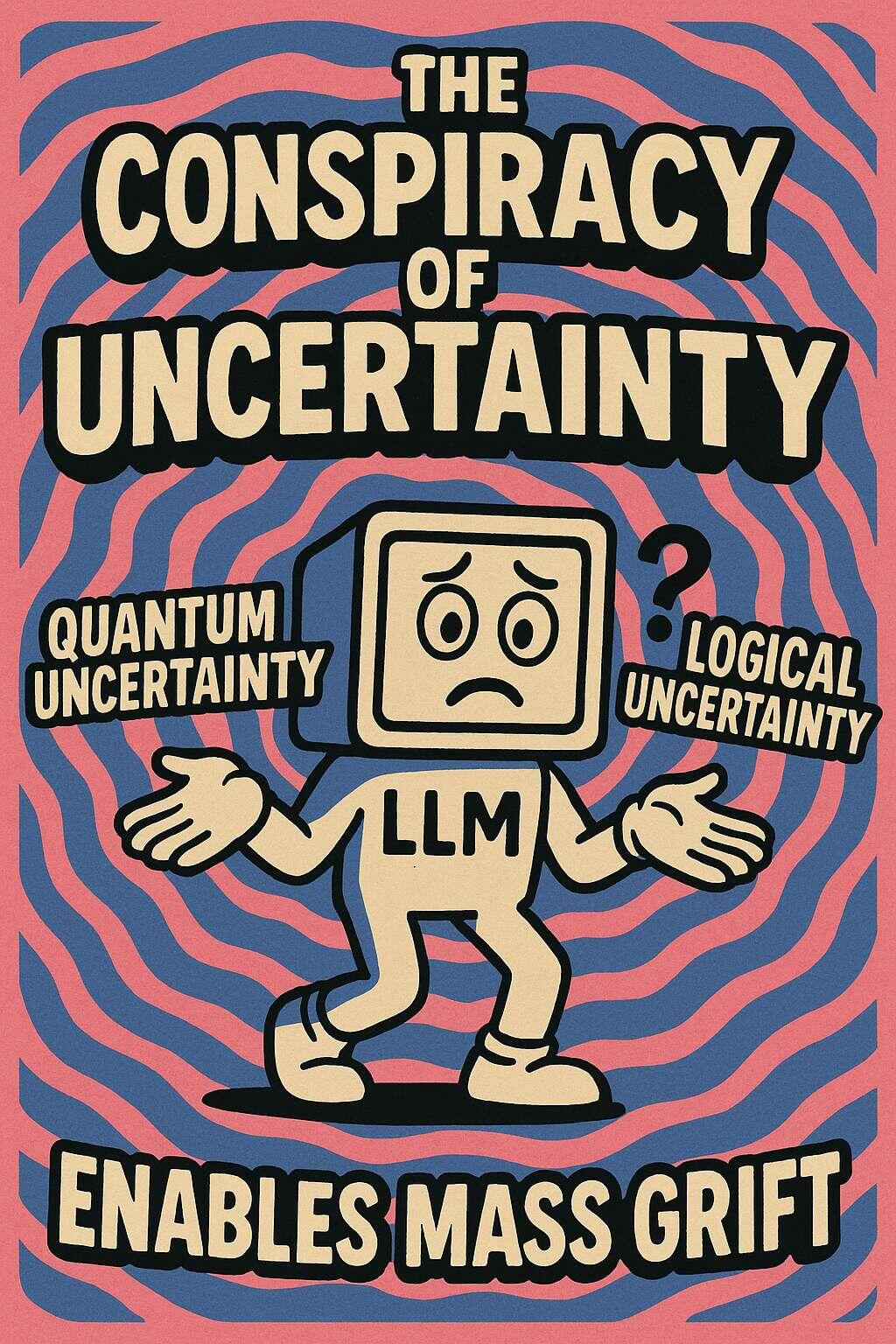

In a world thirsting for clarity, a new kind of darkness has emerged — not born of ignorance, but of probabilistic overload. The rise of Large Language Models (LLMs) has unleashed a silent epistemic coup. At the heart of it lies a subtle yet devastating distortion: the conflation of quantum uncertainty with logical uncertainty, and a postmodern tolerance for ambiguity dressed up as intelligence.

This is not a mere philosophical blunder. It is the substrate of a new form of industrial-scale grift.

---

Quantum Uncertainty vs. Logical Uncertainty: A Necessary Distinction

Quantum uncertainty is a feature of physical systems. The Heisenberg Uncertainty Principle tells us that certain pairs of properties, such as position and momentum, cannot both be known simultaneously with arbitrary precision, a limit that becomes significant at atomic scales. This isn't about limited knowledge or computation; it's about physical reality itself.
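
For reference, the position-momentum case is usually written in the following standard textbook form, where $\sigma_x$ and $\sigma_p$ are the standard deviations of position and momentum and $\hbar$ is the reduced Planck constant:

$$
\sigma_x \, \sigma_p \;\ge\; \frac{\hbar}{2}
$$

The bound depends only on a physical constant, not on the observer's instruments or computing power, which is the sense in which this uncertainty is a property of the system rather than of our reasoning.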

Logical uncertainty, on the other hand, pertains to what we can deduce given a set of axioms. It's a reflection of our reasoning limits: a computational or epistemic constraint. Gödel showed that any consistent formal system powerful enough to express basic arithmetic contains true statements that cannot be proved within that system. But crucially, these statements are still true, whether or not we can prove them.

Where quantum uncertainty reflects ontological indeterminacy, logical uncertainty reflects epistemological incompleteness.

---

LLMs: The Statistical Chimera of Meaning

LLMs like ChatGPT, Claude, and Gemini do not understand the world. They calculate, probabilistically, what the next word (more precisely, the next token) might be, based on statistical correlations in their training data. They are autoregressive transformer networks whose attention layers reconstruct linguistic surface forms, not truths.
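
The next-word calculation described above can be sketched in a few lines. This is a toy illustration, not any vendor's implementation: the four-word vocabulary, the scores, and the function names `softmax` and `sample_next_token` are all made up for the example, and a real model would compute the scores with a neural network.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw scores into a probability distribution."""
    scaled = [l / temperature for l in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_token(vocab, logits, temperature=1.0, rng=random):
    """Draw one token at random, weighted by its probability."""
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]

# Hypothetical scores for completing "The capital of France is"
vocab = ["Paris", "Lyon", "London", "banana"]
logits = [5.0, 2.0, 1.5, -3.0]

probs = softmax(logits)
greedy = vocab[probs.index(max(probs))]  # most likely token
sampled = sample_next_token(vocab, logits, temperature=1.5, rng=random.Random(0))

print(dict(zip(vocab, (round(p, 3) for p in probs))))
print("greedy:", greedy, "| sampled:", sampled)
```

Nothing in this loop checks whether the chosen word is true; it only checks which word is probable. Raising `temperature` flattens the distribution and makes unlikely words more frequent.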

But these models are marketed — and worse, believed — to be “intelligent.” Their foggy outputs, peppered with caveats, probabilities, and confident hallucinations, are interpreted as nuanced thinking. What’s actually happening is different: LLMs create a synthetic uncertainty — a blur — that simulates intelligence by never being pinned down.

This blur is where the grift hides.

---

The Grift Machine: How Ambiguity Became a Business Model

Because LLMs have no epistemological backbone, they are malleable by design. They generate authoritative-sounding answers that are rarely certain but always confident. In doing so, they mimic the voice of science without its rigor, the tone of authority without its accountability.

Enter the grifters.

Startup founders, consultants, crypto scammers, and tech evangelists quickly realized that LLMs could generate:

Fake expertise (instant "experts" trained on surface-level fluency)

Fake consensus (auto-generated research papers and academic padding)

Fake moral reasoning (persuasive but non-deterministic ethics)

Fake originality (novel-sounding ideas cribbed from entropy)

These outputs are then packaged as premium products: AI-native education, AI-enhanced legal advice, AI-generated scientific discovery. The outputs remain probabilistic guesses. The sales pitch is certainty.

The ambiguity is not a bug; it’s a feature. It allows sellers to claim plausibility while avoiding falsifiability, and an unfalsifiable claim is the cardinal sin in both science and engineering. If it fails, “that’s just the model.” If it succeeds, “we trained it right.” Heads I win, tails you lose.

---

The Mass Psychological Consequence: Cognitive Erosion

The effect on public perception is devastating. As LLMs proliferate, more people lose their grip on what certainty means. The statistical fog becomes a new norm. Reason, once seen as a structured and verifiable process, is now degraded into a vibe — something approximated, not deduced.

This confuses students, professionals, and policymakers alike.

Instead of asking what is true, people now ask what is likely to be accepted. This is post-truth epistemology weaponized. Grifters no longer need to prove anything — they merely need to produce something that sounds right.

LLMs help them do this at scale.

---

ECAI: A Response to the Fog

Contrast this with Elliptic Curve AI (ECAI). Unlike LLMs, ECAI doesn't "guess." It retrieves deterministic, cryptographically structured knowledge. Instead of relying on statistical patterns, ECAI encodes facts as points on elliptic curves, making them cryptographically verifiable and logically coherent.
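
The text does not specify how ECAI performs this encoding, so the following is only a sketch of what "a fact as a point on an elliptic curve" could look like. It assumes a simple try-and-increment mapping built on SHA-256 and borrows the secp256k1 curve parameters purely because they are well known. The names `fact_to_point` and `is_on_curve` and the sample fact are hypothetical, and none of this should be read as ECAI's actual method.

```python
import hashlib

# secp256k1 field prime and coefficient, used only as a familiar example:
# the curve is y^2 = x^3 + 7 (mod P)
P = 2**256 - 2**32 - 977
B = 7

def fact_to_point(fact: str):
    """Deterministically map a string to a curve point (try-and-increment)."""
    counter = 0
    while True:
        digest = hashlib.sha256(f"{counter}:{fact}".encode("utf-8")).digest()
        x = int.from_bytes(digest, "big") % P
        rhs = (pow(x, 3, P) + B) % P
        # P % 4 == 3, so if rhs is a square, one root is rhs^((P+1)/4) mod P
        y = pow(rhs, (P + 1) // 4, P)
        if (y * y) % P == rhs:
            return (x, y)
        counter += 1  # x was not on the curve; try the next candidate

def is_on_curve(point) -> bool:
    """Check that a point satisfies the curve equation."""
    x, y = point
    return (y * y - (pow(x, 3, P) + B)) % P == 0

fact = "water boils at 100 C at 1 atm"  # hypothetical example fact
point = fact_to_point(fact)

print(is_on_curve(point))            # membership can be re-checked by anyone
print(fact_to_point(fact) == point)  # same input always yields the same point
```

Under these assumptions the same fact always maps to the same point, and anyone can recompute the mapping and confirm the point lies on the curve. Production systems would more likely use a standardized hash-to-curve construction than this loop, which is kept here only for brevity.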

With ECAI, knowledge is not a roll of the dice — it's a retrievable, immutable intelligence state. No ambiguity. No hallucination. Just verifiable information structured for non-local access.

The deterministic clarity of ECAI isn’t just a technical upgrade. It’s a philosophical one. It rejects the fog and brings back the sunlight of certainty.

---

Conclusion: Reclaiming Certainty in the Age of Blur

The conspiracy of uncertainty is not just a side effect of new technology; it is being exploited to enable the largest-scale grift operations in modern intellectual history. By muddying the waters between physical indeterminacy and logical uncertainty, LLMs offer the perfect smokescreen for those who trade in surface without substance.

We must reclaim the distinction between what is unknowable and what is simply unstructured.

And we must build — and demand — technologies that reflect and reinforce that distinction.

ECAI is that demand made manifest.
