jibone on Nostr: Thoughts on local DeepSeek-r1:14b Locally I only have these two models, so I can only ...

Thoughts on local DeepSeek-r1:14b

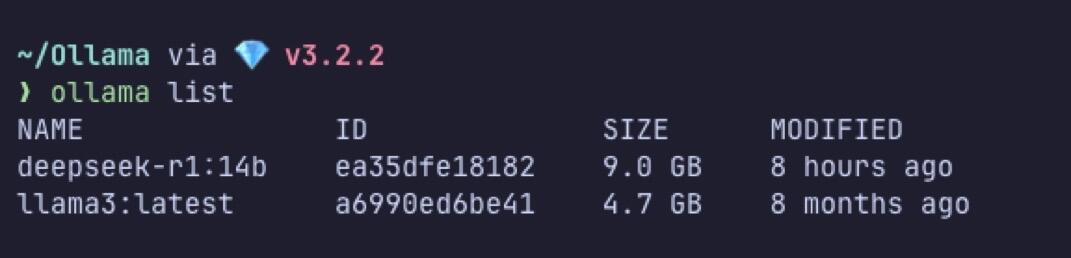

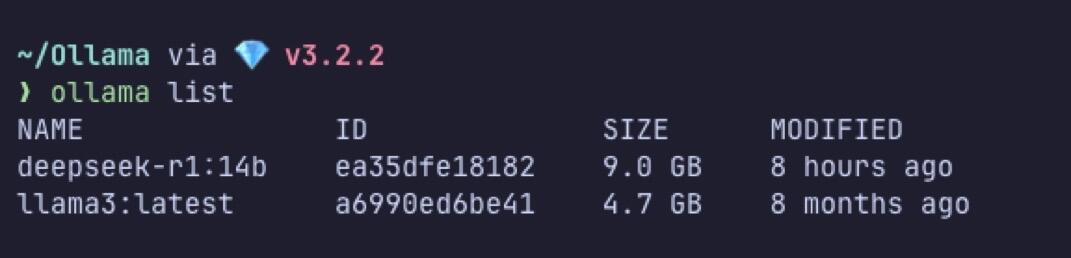

Locally I only have these two models, so I can only compare it to Llama3 (7b). I have an Obsidian AI chat plugin that uses Ollama. I use it to add context to my notes, to reword sentences, and to get suggestions.
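The post doesn't describe the plugin's internals. As a rough illustration of what "uses Ollama" involves, here is a minimal sketch that sends one prompt to a local Ollama server through its REST endpoint `/api/generate`. The address `localhost:11434` (Ollama's default) and the helper names `build_request` and `generate` are assumptions for illustration, not the plugin's code.

```python
import json
import urllib.request

# Assumption: a stock local Ollama install listening on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> dict:
    """Build a non-streaming /api/generate payload (hypothetical helper)."""
    return {"model": model, "prompt": prompt, "stream": False}


def generate(model: str, prompt: str, url: str = OLLAMA_URL) -> str:
    """Send one prompt to Ollama and return the generated text (hypothetical helper)."""
    body = json.dumps(build_request(model, prompt)).encode("utf-8")
    req = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        # With "stream": False the server returns a single JSON object;
        # the generated text is in its "response" field.
        return json.loads(resp.read())["response"]
```

Usage, with the server running and the model pulled (`ollama pull deepseek-r1:14b`): `generate("deepseek-r1:14b", "Reword this sentence: ...")`.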

DeepSeek does feel a bit “smarter” than Llama3. I say “feel” because I don’t really have an objective way to benchmark and compare the two models.
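"Smarter" is hard to measure, and this sketch doesn't try. What Ollama does report objectively is speed: a non-streaming `/api/generate` response includes `eval_count` (tokens generated) and `eval_duration` (nanoseconds), so tokens per second can be compared across models on the same prompts. The helper name `tokens_per_second` is hypothetical, and the numbers below are illustrative, not measurements from the post.

```python
def tokens_per_second(result: dict) -> float:
    """Generation speed from a parsed Ollama /api/generate response.

    eval_count is the number of tokens generated; eval_duration is in
    nanoseconds, hence the 1e9 factor to convert to seconds.
    """
    return result["eval_count"] * 1e9 / result["eval_duration"]


# Illustrative numbers only: 100 tokens generated in 5 seconds.
sample = {"eval_count": 100, "eval_duration": 5_000_000_000}
print(f"{tokens_per_second(sample):.1f} tokens/s")  # prints "20.0 tokens/s"
```

This measures throughput only; judging answer quality still needs a human reader or a proper evaluation set.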

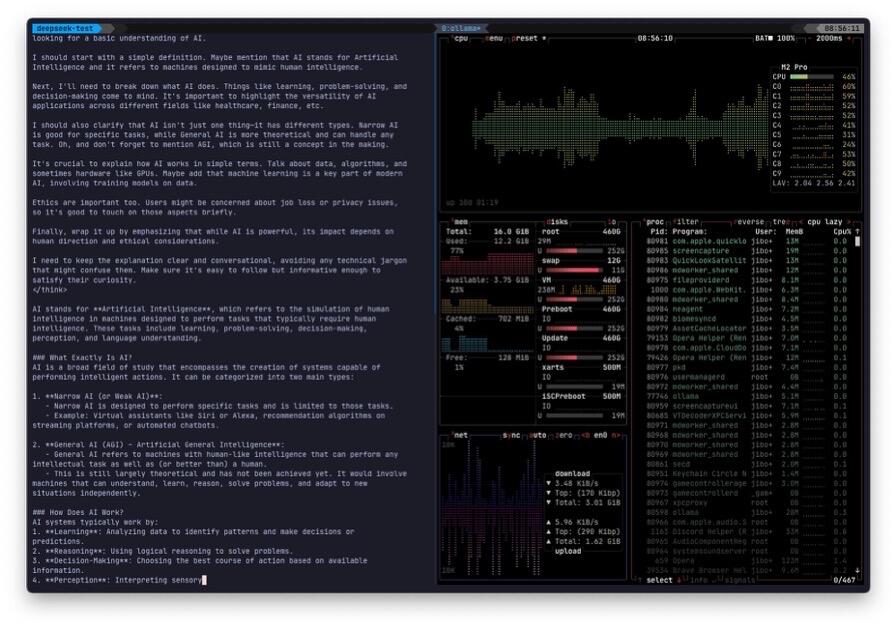

Then again, it might not be an “apples to apples” comparison: Llama3 is 7b and DeepSeek-r1 is 14b. I am just pleasantly surprised that I could run it comfortably on a MacBook M2 with 16GB RAM.
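A back-of-the-envelope estimate shows why a 14b model can fit in 16GB: weight memory ≈ parameters × bits per weight ÷ 8. The sketch below assumes a 4-bit quantized build, which is common for local Ollama models, but the post doesn't say which quantization was used. It counts only the weights, not the KV cache or runtime overhead. On Apple Silicon the CPU and GPU share unified memory, so the weights count against the same 16GB as everything else.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Rough size of the model weights alone, in gigabytes (1 GB = 1e9 bytes).

    Ignores the KV cache, activations, and runtime overhead.
    """
    return params_billion * bits_per_weight / 8


# Compare full 16-bit weights against common quantization levels.
for bits in (16, 8, 4):
    print(f"14b @ {bits}-bit: ~{weight_memory_gb(14, bits):.1f} GB")
# prints ~28.0 GB, ~14.0 GB and ~7.0 GB
```

At 16-bit the weights alone would exceed 16GB; at 4-bit they leave room for the OS and the context.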

Yes, I know that what I’m using is a distilled version of the model, not the full model. Still, I feel that it performs better than Llama.

I love reading the `<think>` part; it’s like reading the inner monologue of an LLM 😅
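DeepSeek-R1 models wrap their reasoning in `<think>...</think>` tags before the final answer. When that reasoning arrives inline in the response text, a minimal sketch like the one below can separate the two, for example to show the "inner monologue" in a collapsible block. The function name `split_reasoning` is hypothetical, and it assumes a single reasoning block per response.

```python
import re

# Matches the first <think>...</think> block, including newlines inside it.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(text: str) -> tuple[str, str]:
    """Split raw model output into (reasoning, answer).

    If no <think> block is present, the reasoning is empty and the whole
    text is treated as the answer.
    """
    match = THINK_RE.search(text)
    if match is None:
        return "", text.strip()
    reasoning = match.group(1).strip()
    # Everything outside the tag pair is the visible answer.
    answer = (text[: match.start()] + text[match.end():]).strip()
    return reasoning, answer
```

For example, `split_reasoning("<think>\nUser wants a reword.\n</think>\n\nHere it is.")` returns `("User wants a reword.", "Here it is.")`.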

I have yet to try the online version; their servers are always busy for me. 🤷‍♂️

#ai #deepseek #ollama

MacBook Pro M2 / 16GB RAM can run DeepSeek-r1 14b comfortably. #ai #deepseek #ollama note1ext…88ql


Published at 2025-01-29 08:45:42 (UTC)

Event JSON

```json
{
  "id": "3a1fbe4bb64f102a332cb1ba249a45b25e3062150a8585ce0453615cc114d92d",
  "pubkey": "08233767a972dd9fec5b12a0573f9d4b74796cef8ecb428cae4a2f6b81b62449",
  "created_at": 1738140342,
  "kind": 1,
  "tags": [
    ["p", "08233767a972dd9fec5b12a0573f9d4b74796cef8ecb428cae4a2f6b81b62449"],
    [
      "imeta",
      "url https://image.nostr.build/4959053573a1511ad5b91ab84a1e2a860104736465e6b1e33b481ba08792ee8e.jpg",
      "blurhash e15E:N?wtlx]M^E0x]tlScRi8^WCWCMxj[?dRQMxjIo34mD$Vtxcs=",
      "dim 1071x258"
    ],
    ["t", "ai"],
    ["t", "deepseek"],
    ["t", "ollama"],
    ["r", "https://image.nostr.build/4959053573a1511ad5b91ab84a1e2a860104736465e6b1e33b481ba08792ee8e.jpg"]
  ],
  "content": "Thoughts on local DeepSeek-r1:14b\n\nLocally I only have these two models, so I can only compare it to Llama3 (7b). I have an Obsidian AI chat plugin that uses Ollama. I use it to add context to my notes, or to reword certain sentences, or give suggestions on certain things.\n\nDeepSeek does feel a bit “smarter” than Llama3. I say “feel” because I don’t really have an objective way to benchmark and measure both of the model.\n\nThen again it might no be “apples to apples” comparison. Llama3 is 7b and DeepSeek-r1 is 14b. I am just pleasantly surprise that I could run it comfortably on a MackBook M2 / 16GB RAM.\n\nYes I know that what I’m using is a distilled version of the model and it is not the full model. Yet I feel that it is performing better than Llama.\n\nI love reading the \u003cthinking/\u003e part, it is like reading an inner monolog of a LLM 😅\n\nI have yet to try the one online, their server is always busy for me. 🤷♂️\n\n#ai #deepseek #ollama https://image.nostr.build/4959053573a1511ad5b91ab84a1e2a860104736465e6b1e33b481ba08792ee8e.jpg nostr:note1ak4au7zd8d4h44gvvz9pwhq7fn4glk6kx0s6xtjqp7aq0q0mf8ssxugztr",
  "sig": "f82f2031432ca8e7d05482aa3cc2a808ffdabdf3947e20a1b687bb53c1448ed6a654b747ba149596ace35b6413e7f2f73e530e591876c35dd6c5e96b85a0af89"
}
```
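The `id` in a Nostr event is derived, not arbitrary: per NIP-01 it is the SHA-256 of the event serialized as `[0, pubkey, created_at, kind, tags, content]` with no extra whitespace, and `created_at` is a Unix timestamp in seconds (1738140342 is 2025-01-29 08:45:42 UTC, the "Published at" time). The sketch below is a minimal illustration with hypothetical helper names. It does not verify `sig`, which needs BIP-340 Schnorr signatures and a secp256k1 library. Reproducing the exact `id` above also requires the `content` string byte-for-byte, and Python's `json` module escapes a few rare control characters that NIP-01 wants left verbatim.

```python
import hashlib
import json
from datetime import datetime, timezone


def serialize_event(event: dict) -> str:
    """NIP-01 serialization: [0, pubkey, created_at, kind, tags, content], compact."""
    return json.dumps(
        [0, event["pubkey"], event["created_at"], event["kind"], event["tags"], event["content"]],
        separators=(",", ":"),  # no whitespace between tokens
        ensure_ascii=False,  # keep non-ASCII characters (emoji, curly quotes) as UTF-8
    )


def event_id(event: dict) -> str:
    """Event id: lowercase hex SHA-256 of the UTF-8 serialized event."""
    return hashlib.sha256(serialize_event(event).encode("utf-8")).hexdigest()


def published_at(event: dict) -> str:
    """Render created_at (Unix seconds) as a UTC timestamp string."""
    dt = datetime.fromtimestamp(event["created_at"], tz=timezone.utc)
    return dt.strftime("%Y-%m-%d %H:%M:%S")
```

For example, `published_at({"created_at": 1738140342})` returns `"2025-01-29 08:45:42"`.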
