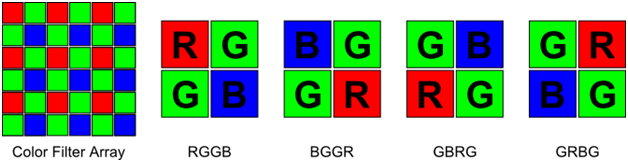

There are several reasons to use a monochrome camera. Regular cameras have what’s known as a Bayer array, a pattern of red, green, and blue filters over the sensor’s pixels that can’t be removed. Because our eyes are most sensitive to green light, a standard Bayer array has two green pixels for every one red and one blue pixel. Even if you convert the image to grayscale afterward, that does not change how the light was gathered.
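To make the pixel split concrete, here is a minimal sketch that lays out a Bayer mosaic and counts how much of the sensor sits under each color. It assumes the common RGGB tile ordering (other orderings exist), and the function names are just mine for illustration.

```python
from collections import Counter

# One 2x2 tile of the Bayer pattern. RGGB ordering is an assumption here;
# real sensors may start the tile on a different corner.
RGGB_TILE = (("R", "G"),
             ("G", "B"))

def bayer_color(row, col):
    """Return the filter color over the pixel at (row, col)."""
    return RGGB_TILE[row % 2][col % 2]

def color_fractions(height, width):
    """Fraction of the sensor's pixels sitting under each filter color."""
    counts = Counter(
        bayer_color(r, c) for r in range(height) for c in range(width)
    )
    total = height * width
    return {color: counts[color] / total for color in "RGB"}

fractions = color_fractions(4, 6)
print(fractions)
```

Half of the pixels end up under green filters, and a quarter each under red and blue.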

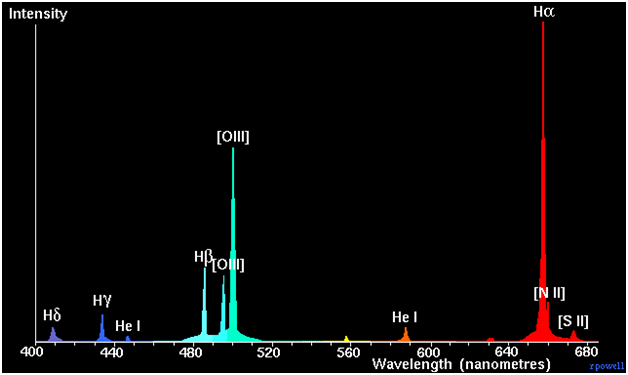

The problem with this in astrophotography is that few things emit green light (see the second image, which is the spectrum of a nebula). Most nebulae are ionized. Ionized gases, like hydrogen, oxygen, helium, sulfur, etc., tend to avoid green. The closest we get is Oxygen III emission, which sits on the border of blue and green (it’s cyan to my eyes), and helium, which has emission in the yellow and orange range (within the window of acceptance for both red and green pixels). But helium emission is not all that common to start with.

Effectively, this means that half the sensor isn’t doing any work, so a color camera’s exposures need to run roughly twice as long to match a mono camera.

To shoot in color with a mono camera, you place colored filters in front of the sensor, shoot each color separately, and then combine the frames. If you know your target is mostly red and blue, you can choose exposure lengths that favor blue and red while skimping on green. A general rule is that emission nebulae are mostly red and slightly blue, while galaxies and reflection nebulae are broadband targets. So for a galaxy or a reflection nebula, try to get equal amounts of all colors (a mono camera isn’t necessarily better here), while for an emission nebula you can target just a few colors.
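As a rough illustration of planning a night this way, the sketch below splits a fixed amount of imaging time across filters in proportion to weights you pick. The weights in the example are made up for an emission nebula and are not a recommendation; `split_exposure` is a hypothetical helper, not part of any capture software.

```python
def split_exposure(total_minutes, weights):
    """Divide total imaging time across filters in proportion to `weights`."""
    total_weight = sum(weights.values())
    return {
        name: total_minutes * weight / total_weight
        for name, weight in weights.items()
    }

# Hypothetical weighting for a mostly red, slightly blue target.
plan = split_exposure(120, {"R": 3, "G": 1, "B": 2})
print(plan)
```

For a broadband target like a galaxy you would simply give every filter the same weight.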

The other advantage is that you can shoot with no filter at all to get what’s called a luminance frame. This means that all of the light that falls on the sensor gets recorded, which helps pick out ultra-faint details that RGB cameras would struggle to reach.

Lastly, because the camera shoots in mono, you can also use narrowband filters to target specific wavelengths of light. This is called narrowband imaging, and it’s especially popular in light-polluted areas. The results are vivid, but they are not true-color images. Look up the Hubble Palette for examples of this.
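For a feel of how a false-color narrowband image is put together, here is a minimal sketch of the Hubble Palette channel mapping: Sulfur II goes to red, Hydrogen-alpha to green, and Oxygen III to blue. The tiny frames are invented values, and real processing software also stretches and balances each channel, which this skips.

```python
# Hubble (SHO) palette: which narrowband filter feeds which display channel.
HUBBLE_PALETTE = {"red": "SII", "green": "Ha", "blue": "OIII"}

def combine_narrowband(frames, palette=HUBBLE_PALETTE):
    """Merge per-filter grayscale frames into rows of (r, g, b) pixels.

    `frames` maps a filter name to a 2D list of brightness values (0.0-1.0).
    All frames are assumed to have the same dimensions and to be aligned.
    """
    red, green, blue = (frames[palette[ch]] for ch in ("red", "green", "blue"))
    return [
        list(zip(r_row, g_row, b_row))
        for r_row, g_row, b_row in zip(red, green, blue)
    ]

# Made-up 1x2 frames, one per filter.
frames = {
    "SII": [[0.1, 0.2]],
    "Ha": [[0.8, 0.9]],
    "OIII": [[0.3, 0.0]],
}
image = combine_narrowband(frames)
print(image)
```

Because Hydrogen-alpha is really deep red but is displayed as green, the colors in the result are a mapping choice and not what your eye would see.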

As for B&W film, you can use it. I’ve thought about it myself, since I have an old Nikon FE2. The general problem with film, however, is that it’s less sensitive to light than a mono CCD or CMOS camera. Its quantum efficiency (the percentage of the light falling on it that actually gets recorded) is around 10%, while it’s around 60–90% for a digital camera. The other problem is reciprocity. Each pixel on a digital sensor has a “well”, meaning it can record many photons over a single exposure. Film uses light-sensitive crystals, and once a crystal detects a photon, it will not record another one. This leads to something called reciprocity failure. It changes for every object, and it’s impossible to know whether you got the exposure right. I think this would get harder with filters.
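To put the quantum efficiency numbers in perspective, here is a back-of-the-envelope sketch using the rough figures above (about 10% for film, 60–90% for digital). It deliberately ignores reciprocity failure, which would make film look even worse on long exposures, so treat the ratios as a best case.

```python
def detected_photons(incident, qe_percent):
    """Photons actually recorded, given quantum efficiency in percent."""
    return incident * qe_percent / 100

def exposure_ratio(qe_film_percent, qe_digital_percent):
    """How many times longer film must expose to record as many photons
    as the digital sensor (ignoring reciprocity failure)."""
    return qe_digital_percent / qe_film_percent

print(detected_photons(1000, 10))   # film
print(detected_photons(1000, 60))   # digital, low end
print(exposure_ratio(10, 60))       # low-end digital sensor
print(exposure_ratio(10, 90))       # high-end digital sensor
```

By this estimate, film needs somewhere around six to nine times the exposure just to collect the same number of photons.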

A trick that some people use with digital cameras is to shoot with two cameras. The first is a mono camera that records luminance, and the second is an RGB camera for color. Then the two images are combined. I think it would be easy to mix B&W film and color film in the same way.
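Here is a very simplified sketch of that combination step: keep the color ratios from the RGB image but take the brightness from the luminance frame. This is only one naive way to do it and is my own assumption for illustration; dedicated astrophotography software typically works in a proper color space and handles alignment and noise, none of which is shown here.

```python
def lrgb_pixel(lum, rgb):
    """Keep the color ratios of `rgb` but take brightness from `lum`.

    All values are assumed to be in the range 0.0-1.0.
    """
    brightness = sum(rgb) / 3
    if brightness == 0:
        return (lum, lum, lum)  # no color information: fall back to gray
    scale = lum / brightness
    # Clip so a bright luminance value cannot push a channel past 1.0.
    return tuple(min(1.0, channel * scale) for channel in rgb)

def lrgb_combine(luminance, color):
    """Apply lrgb_pixel across two aligned frames of the same size."""
    return [
        [lrgb_pixel(lum, px) for lum, px in zip(l_row, c_row)]
        for l_row, c_row in zip(luminance, color)
    ]

# Made-up 1x2 frames: a luminance frame and a dimmer color frame.
luminance = [[0.5, 1.0]]
color = [[(0.25, 0.25, 0.25), (0.75, 0.0, 0.0)]]
combined = lrgb_combine(luminance, color)
print(combined)
```

The same idea would apply to scanned B&W and color film frames, as long as the two scans are aligned first.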