Leon Brocard on Nostr: Do large language models break up text into smaller fragments similar to how humans ...

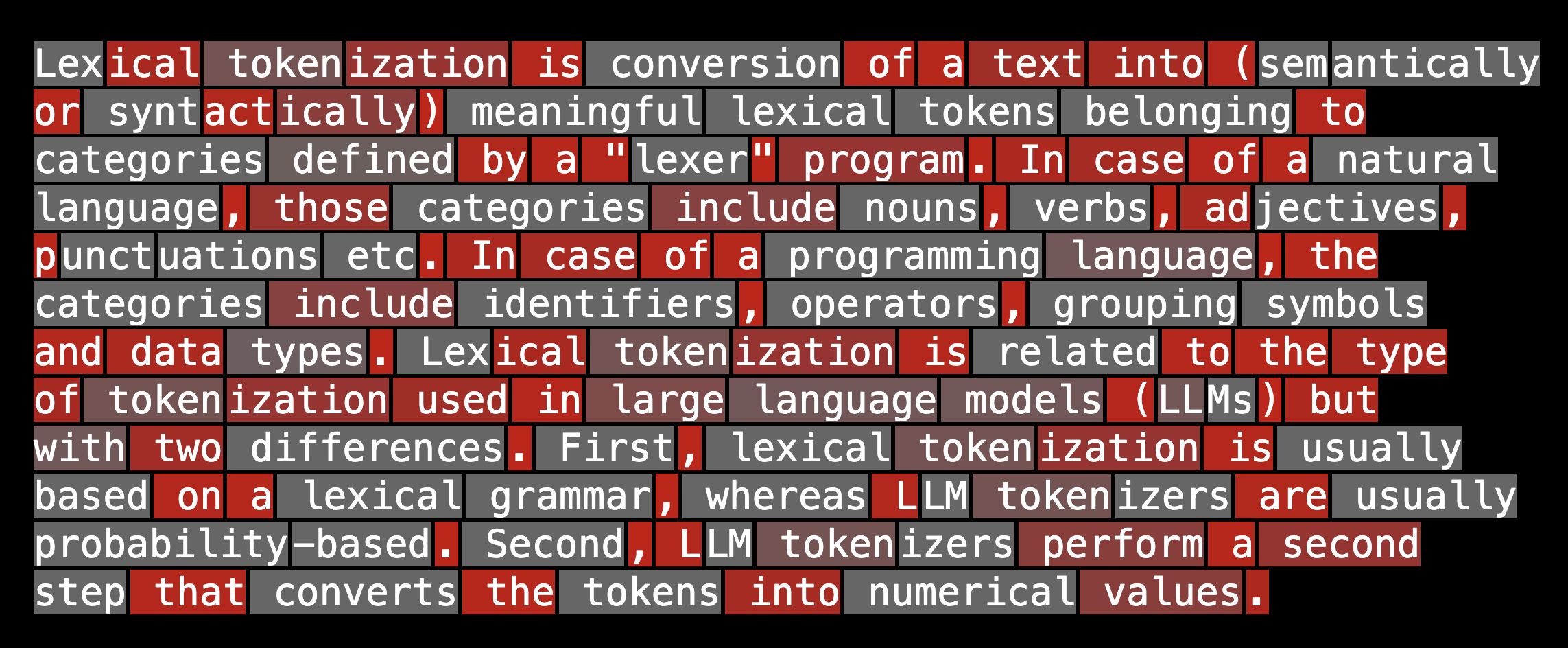

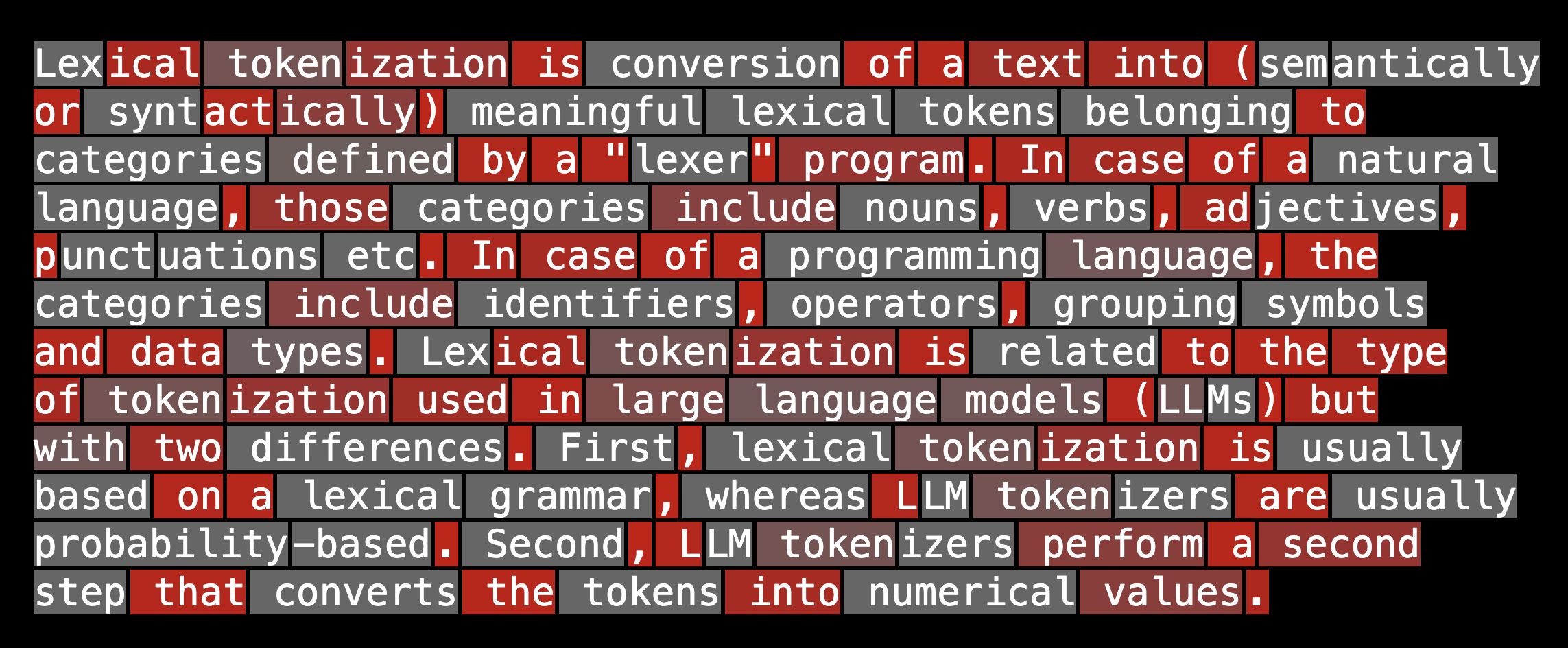

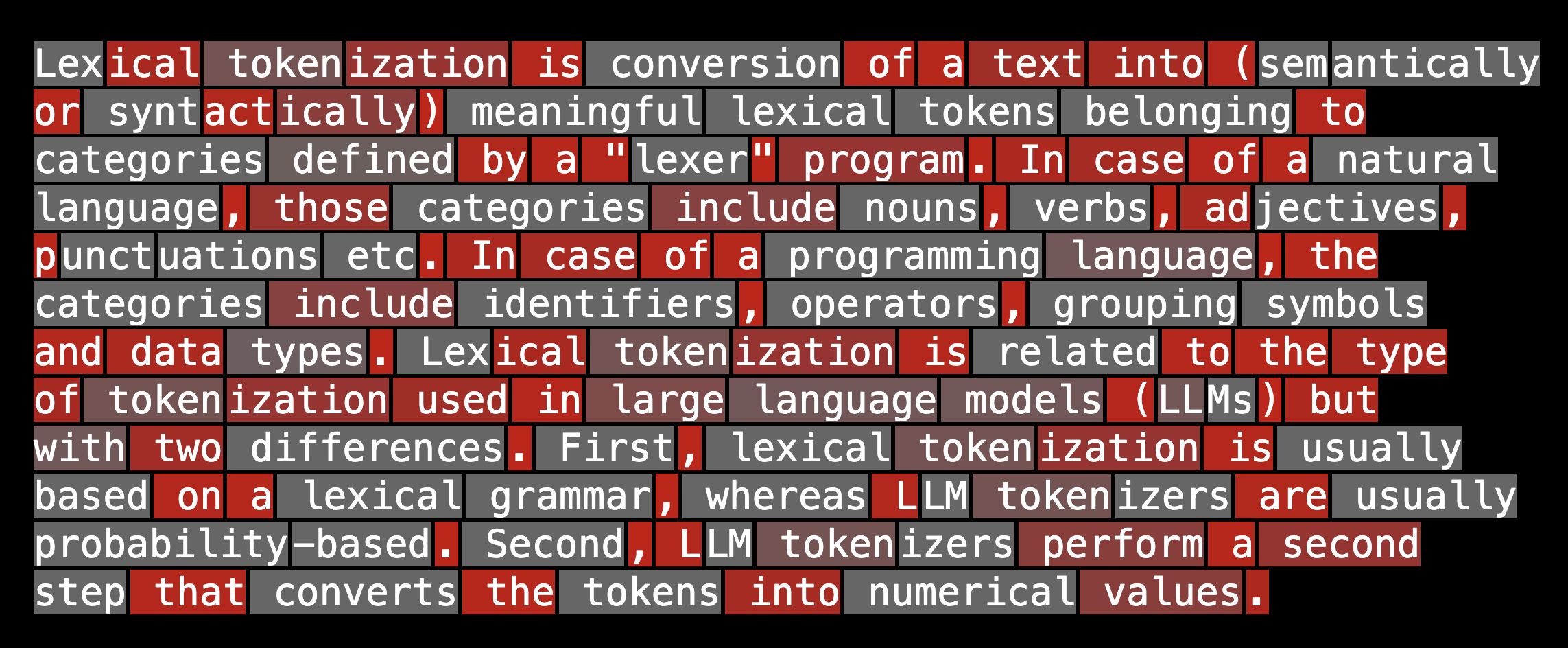

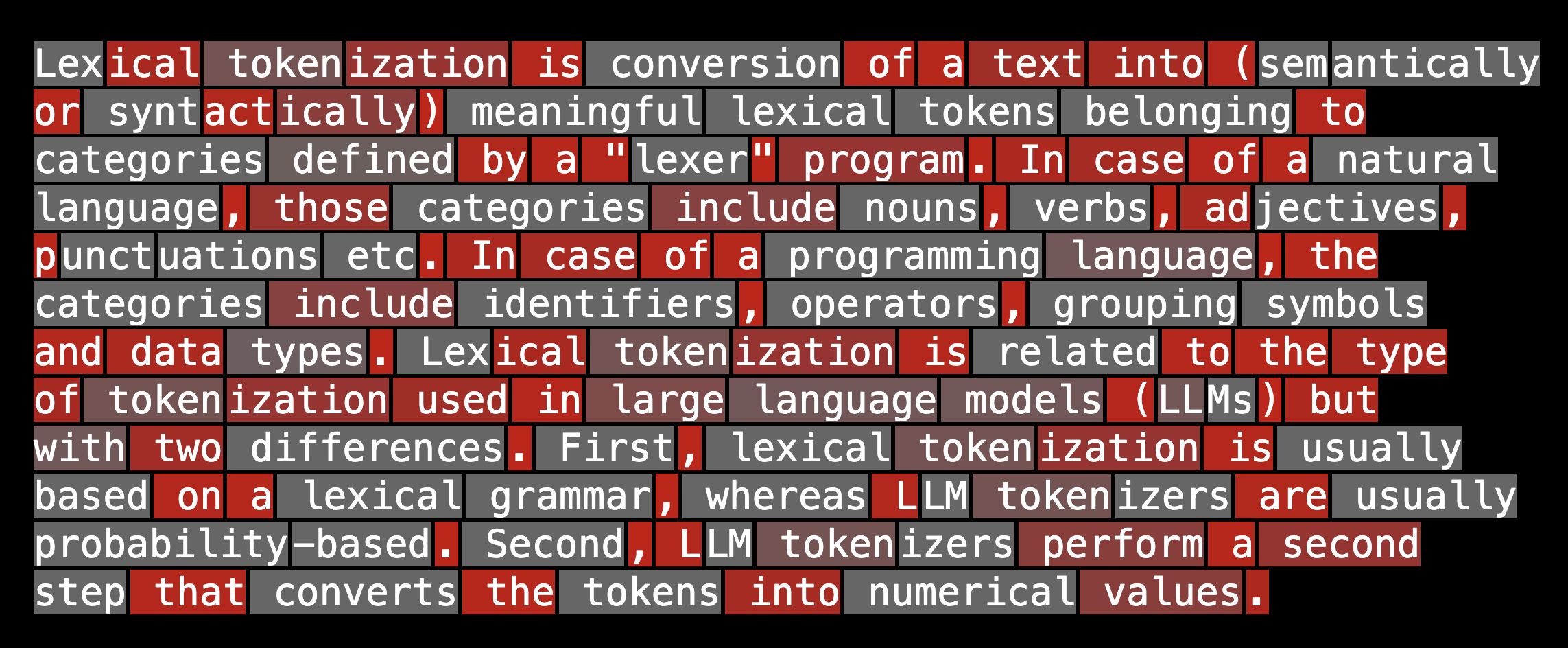

Do large language models break up text into smaller fragments similar to how humans do? To investigate this, I used ChatGPT-4o's tokenizer (o200k_base) to tokenize the first paragraph from

https://en.wikipedia.org/wiki/Lexical_analysis. The boxes are tokens. Common (lower-valued) tokens are coloured red and rarer (higher-valued) tokens are coloured grey. #chatgpt

Published at

2024-07-29 07:23:41Event JSON

{

"id": "82c53d920381e4ff13aad36d189dc00d1296855da0f278560f87c62961c81cee",

"pubkey": "d40fb526963579ad355bd1ce2d7831553f089d9e95d5f606b381dcccaeb273a5",

"created_at": 1722237821,

"kind": 1,

"tags": [

[

"t",

"chatgpt"

],

[

"proxy",

"https://fosstodon.org/users/orangeacme/statuses/112868577848414395",

"activitypub"

]

],

"content": "Do large language models break up text into smaller fragments similar to how humans do? To investigate this, I used ChatGPT-4o's tokenizer (o200k_base) to tokenize the first paragraph from https://en.wikipedia.org/wiki/Lexical_analysis. The boxes are tokens. Common (lower-valued) tokens are coloured red and rarer (higher-valued) tokens are coloured grey. #chatgpt\n\nhttps://cdn.fosstodon.org/media_attachments/files/112/868/565/684/175/558/original/2d8fc0fb42a0de44.png",

"sig": "f60dc8cc6958537258f21688bd926e39a589eb6eefa8a4fcd750ba14a6d198e0c797f03db27d24baa937373c07fc6557893581d8a7da4b2be848d39037712fd4"

}