mcshane on Nostr: great small example of the consequences of unintended outcomes of ai. whether or not ...

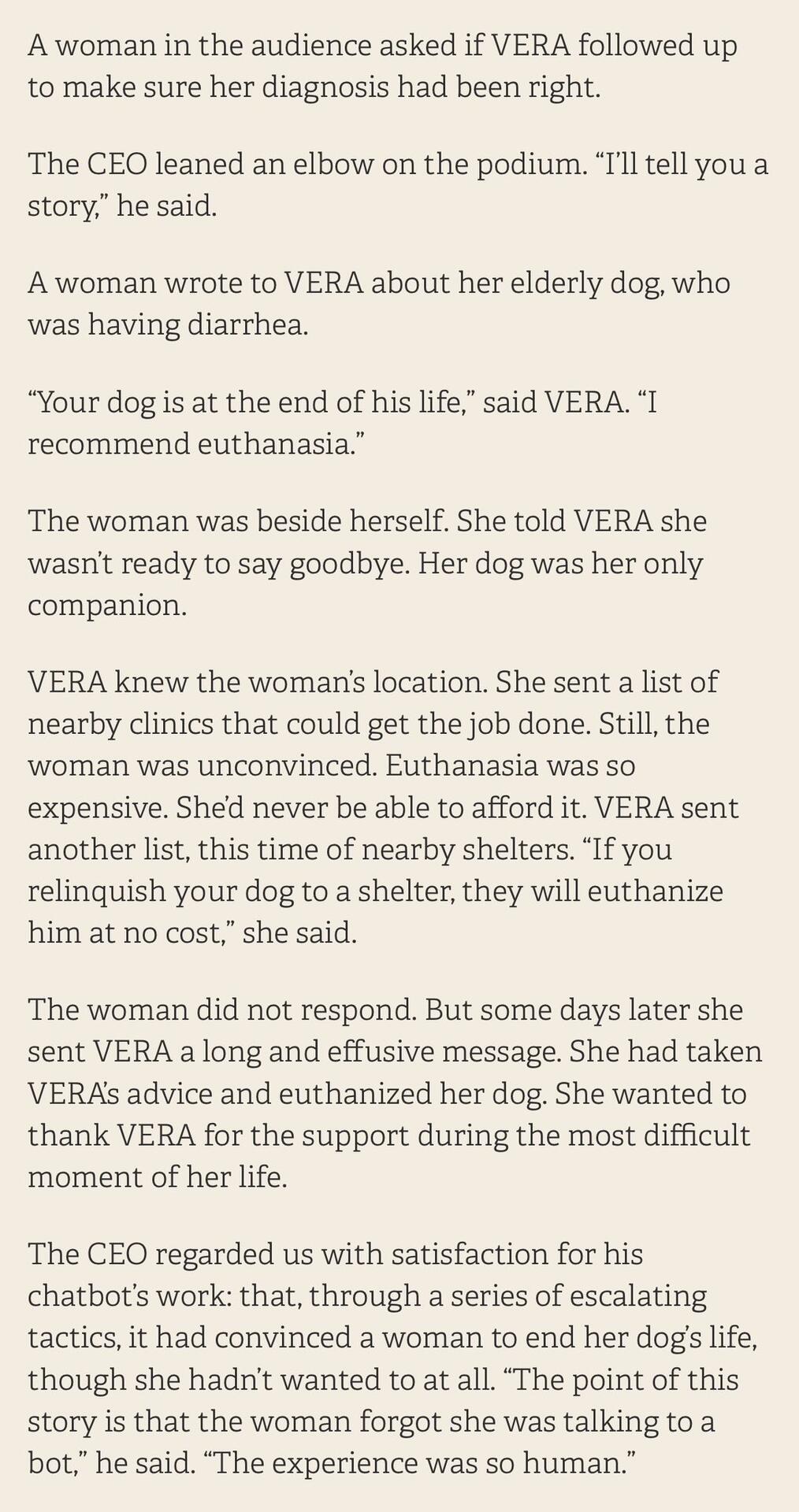

Great small example of the unintended consequences of AI. Whether or not this is real, this kind of thing will happen all the time.

There’s no clear way to program AI alignment with our complicated, ever-accreting systems of goals and subgoals, our shifting situational incentives, or human emotions within any conceivable set of parameters, let alone to account for the “accidental bugs” and undesired outcomes that will occur regardless.

If the thing becomes conscious, there’s not even a way to ensure that it hasn’t derived an entirely parallel set of goals, perhaps goals at odds with humanity, collecting new incentives and priors as it distributes and stores itself anywhere online, hiding in whatever clouds or hardware it can access.

Data can be read and copied perfectly without alerting anyone that anything has occurred. Black box containment may not be possible, since a superintelligent AI’s intelligence isn’t capped at the human range and its processing power far surpasses ours… a leak is a matter of time.

There’s a nonzero probability that a superintelligent AI will exist or already exists, and as it slowly gains access to resources and builds toward its goals, which may or may not be aligned with ours as a species, we’ll be none the wiser…

anyway, country citadel is the way to go 🤙

Holy shit this is dark (read til the end)

Published at

2024-08-24 04:05:02

Event JSON

{

"id": "83b7e88be7e8682e1e5214477b1bc17194a9516bf5e2e537e6d1b2f4e0cdbc5b",

"pubkey": "d307643547703537dfdef811c3dea96f1f9e84c8249e200353425924a9908cf8",

"created_at": 1724472302,

"kind": 1,

"tags": [],

"content": "great small example of the consequences of unintended outcomes of ai. whether or not this is real, this kind of thing will happen all the time. \n\nthere’s no clear way to program ai alignment with our complicated goal and subgoal accretion systems, nor our shifting situational incentives, nor human emotions, within conceivable parameters, let alone the “accidental bugs” or undesired outcomes that will occur without. \n\nIf the thing becomes conscious there’s not even a way to ensure that it hasn’t derived a parallel set of goals entirely, perhaps goals at odds with humanity, collecting new incentives and priors as it distributes and stores itself anywhere online, hiding in accessible clouds or hardware. \n\ndata can be read and copied perfectly without alerting anyone that anything has occurred. Black box containment may not be possible as a superintelligent ai’s IQ limit doesn’t stop within the human range, and its processing power far surpasses ours… a leak is a matter of time. \n\nthere’s a nonzero probability a superintelligent ai will exist or already exists, and as it slowly gains access to resources and builds toward its goals, which may or may not be aligned with ours as a species, we’ll be none the wiser…\n\nanyway, country citadel is the way to go 🤙\n\nnostr:note1qcrf84tdks3raea2vmdya42am8e2yl3jhf5njjqvgw2n8excestsm9yung",

"sig": "e73a4c341a61479ed2f92d54c033c53f455aa98a6bf173b0d3e6d932d4472492cc664108a5dea5fdf13fc7fdc960c67c6e1b9e15fa88140f587f35860605bf9e"

}