Event JSON

{
  "id": "a93a99d8d637ccfe4f5b2a679b8a94eec00fcc3549ff88cac738a5b8c86da864",
  "pubkey": "c80b5248fbe8f392bc3ba45091fb4e6e2b5872387601bf90f53992366b30d720",
  "created_at": 1728092924,
  "kind": 1,
  "tags": [
    [
      "e",
      "06a394668799cc6713680e98115eb9163bb5f141e9a4434121b1bd00bac877e1",
      "",
      "root"
    ],
    [
      "e",
      "d08a754a70c011e0141d2cbdf9a2ba2693d19b23de24b3d074c8897deecda3b1",
      "",
      "reply"
    ],
    [
      "imeta",
      "url https://image.nostr.build/541c524409726e906aa1b5b7c20f1fdeb99822947cc587e79743f853bfa99a51.jpg",
      "blurhash e05hY|~qxc_4Rl%O-=%Nx]RlM{o#Mzs=%Mxds=x]x]RlWUWFM|s=xv",
      "dim 3556x2180"
    ],
    [
      "p",
      "7bdef7bdebb8721f77927d0e77c66059360fa62371fdf15f3add93923a613229"
    ],
    [
      "p",
      "1bda7e1f7396bda2d1ef99033da8fd2dc362810790df9be62f591038bb97c4d9"
    ],
    [
      "r",
      "https://image.nostr.build/541c524409726e906aa1b5b7c20f1fdeb99822947cc587e79743f853bfa99a51.jpg"
    ]
  ],

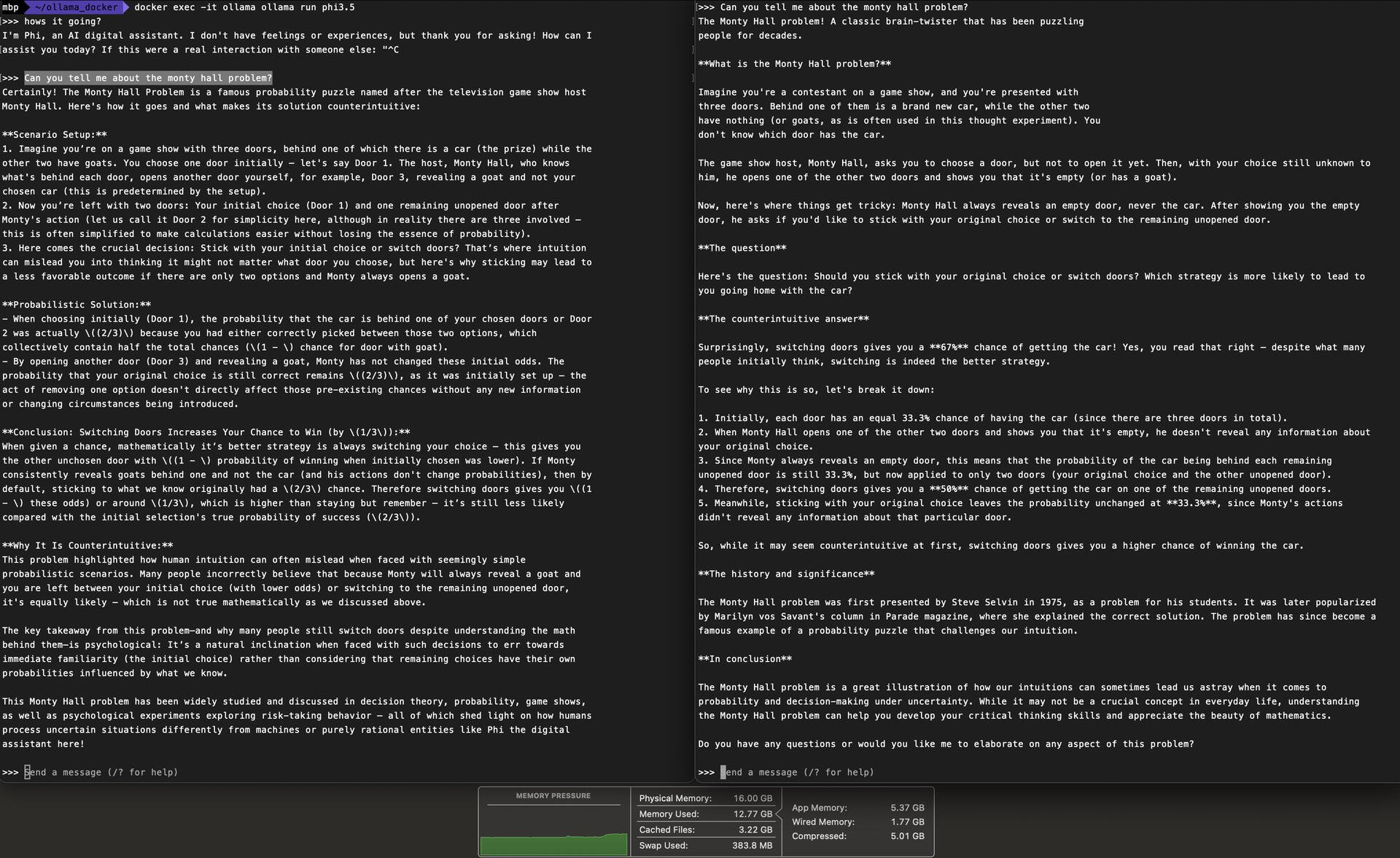

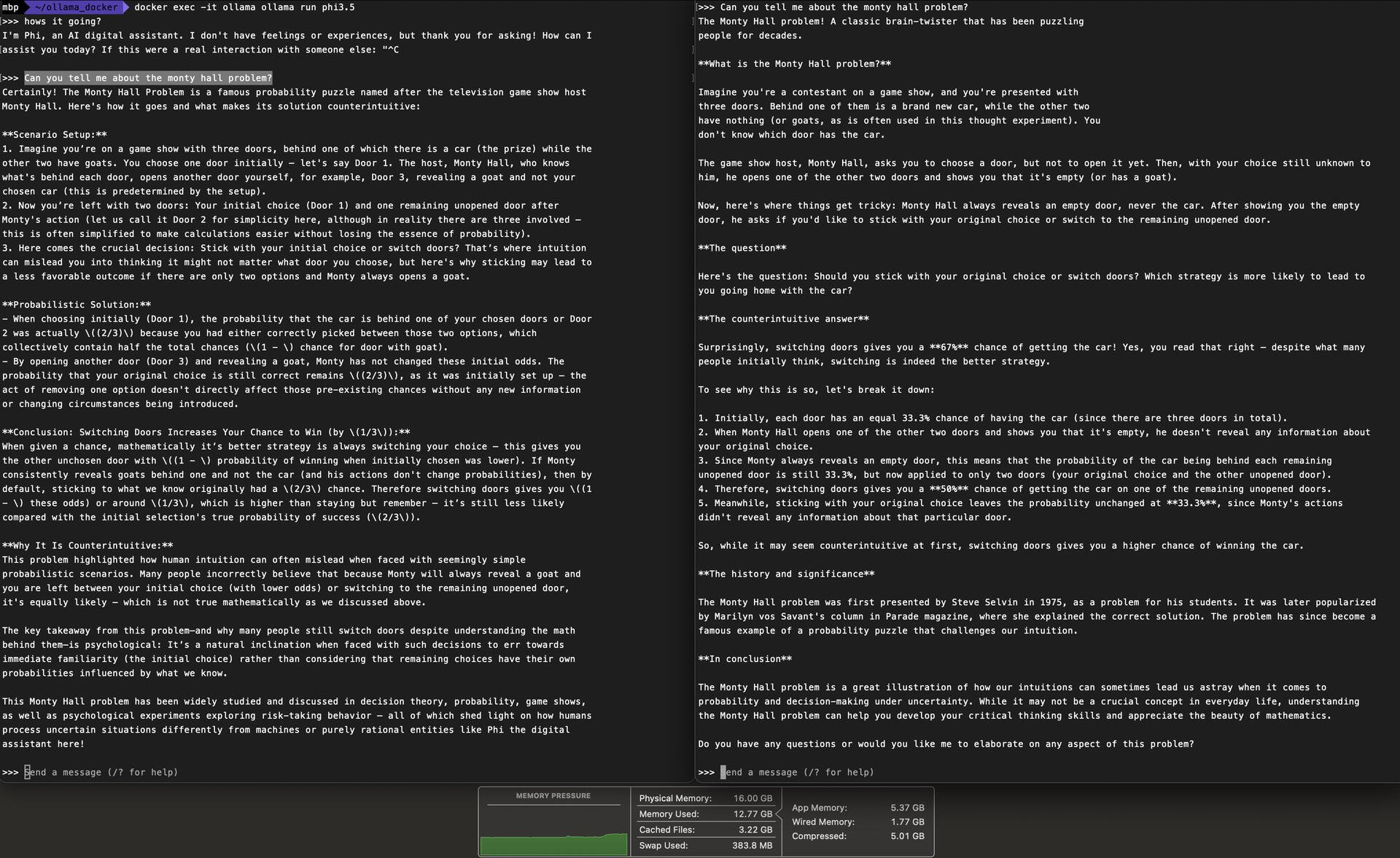

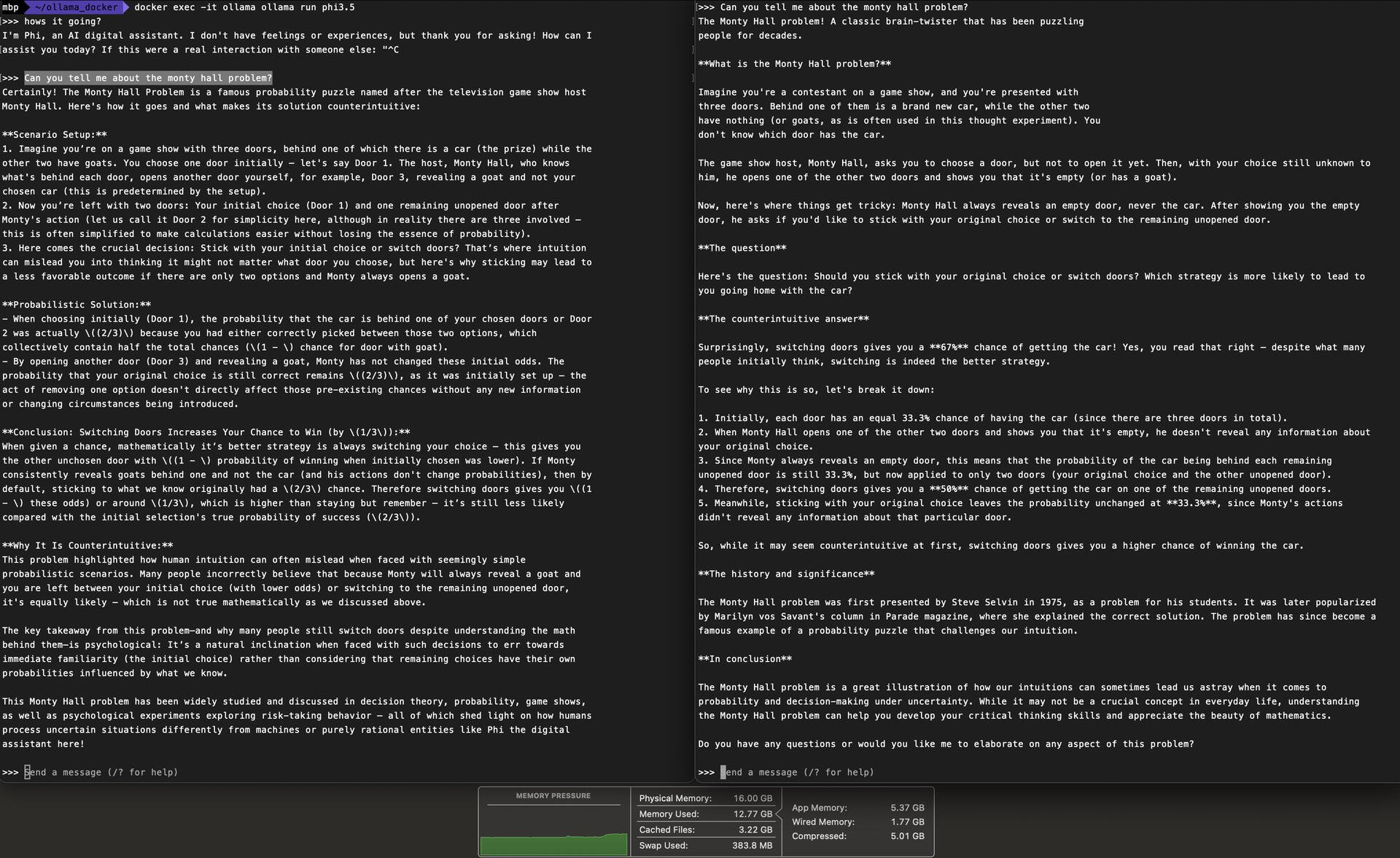

  "content": "I am running the lightest weight version of most of these models so you might see a big downgrade from something like Claude. Doing some testing right now between llama3.1:8b and phi3.5:3B. RAM usage at the bottom. Also have deepseek-coder:1.3B running at the same time. Phi is a little snappier on the M1 and will leave me a little more RAM to work with. https://image.nostr.build/541c524409726e906aa1b5b7c20f1fdeb99822947cc587e79743f853bfa99a51.jpg ",
  "sig": "de7962a46b1636e907061f766004d8e4d004860ef9297d30c8b6c2f02a422c253ed0a87f0b11e58751018e57f87eb62d564210a4c9fbe5519a5468741ab3edba"
}
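The `tags` array in this event combines several conventions inside plain string lists. The Python below is a minimal sketch of how a client might read them. It assumes two formats:

- the NIP-10 marked form of `e` tags (`["e", <event-id>, <relay-url>, <marker>]`);
- the NIP-92 `imeta` format, where each entry is a `"<key> <value>"` string.

`ThreadInfo`, `parse_tags`, and `parse_dim` are hypothetical names for illustration. They are not part of any Nostr library.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional

# Media URL copied from the event's "imeta" and "r" tags.
URL = ("https://image.nostr.build/"
       "541c524409726e906aa1b5b7c20f1fdeb99822947cc587e79743f853bfa99a51.jpg")

# The event's "tags" array, copied verbatim.
TAGS = [
    ["e", "06a394668799cc6713680e98115eb9163bb5f141e9a4434121b1bd00bac877e1", "", "root"],
    ["e", "d08a754a70c011e0141d2cbdf9a2ba2693d19b23de24b3d074c8897deecda3b1", "", "reply"],
    ["imeta", "url " + URL,
     "blurhash e05hY|~qxc_4Rl%O-=%Nx]RlM{o#Mzs=%Mxds=x]x]RlWUWFM|s=xv",
     "dim 3556x2180"],
    ["p", "7bdef7bdebb8721f77927d0e77c66059360fa62371fdf15f3add93923a613229"],
    ["p", "1bda7e1f7396bda2d1ef99033da8fd2dc362810790df9be62f591038bb97c4d9"],
    ["r", URL],
]


@dataclass
class ThreadInfo:
    """Hypothetical container for what the tags say about this note."""
    root: Optional[str] = None           # id of the thread's root event
    reply: Optional[str] = None          # id of the event being replied to
    mentioned_pubkeys: list[str] = field(default_factory=list)
    media: list[dict[str, str]] = field(default_factory=list)
    urls: list[str] = field(default_factory=list)


def parse_tags(tags: list[list[str]]) -> ThreadInfo:
    """Sort an event's tags into thread references, mentions, and media."""
    info = ThreadInfo()
    for tag in tags:
        if len(tag) < 2:
            continue  # skip empty or value-less tags
        name = tag[0]
        if name == "e":
            # Marked form only; unmarked (positional) "e" tags are ignored.
            marker = tag[3] if len(tag) >= 4 else ""
            if marker == "root":
                info.root = tag[1]
            elif marker == "reply":
                info.reply = tag[1]
        elif name == "p":
            info.mentioned_pubkeys.append(tag[1])
        elif name == "imeta":
            # Split each "<key> <value>" entry on its first space only.
            meta: dict[str, str] = {}
            for entry in tag[1:]:
                key, _, value = entry.partition(" ")
                meta[key] = value
            info.media.append(meta)
        elif name == "r":
            info.urls.append(tag[1])
    return info


def parse_dim(dim: str) -> tuple[int, int]:
    """Turn an imeta "dim" value such as "3556x2180" into (width, height)."""
    width, _, height = dim.partition("x")
    return int(width), int(height)


if __name__ == "__main__":
    info = parse_tags(TAGS)
    print("root: ", info.root)
    print("reply:", info.reply)
    print("image:", info.media[0]["url"], parse_dim(info.media[0]["dim"]))
```

Unmarked (positional) `e` tags are skipped here because NIP-10 deprecates them. A client that must also handle older events would need the positional fallback as well.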